Shripriya is defining the guidelines for implementing the review process in her company. Which of the following statements is LEAST likely to have been recommended by her?

Which of the following statements about static testing and dynamic testing is true?

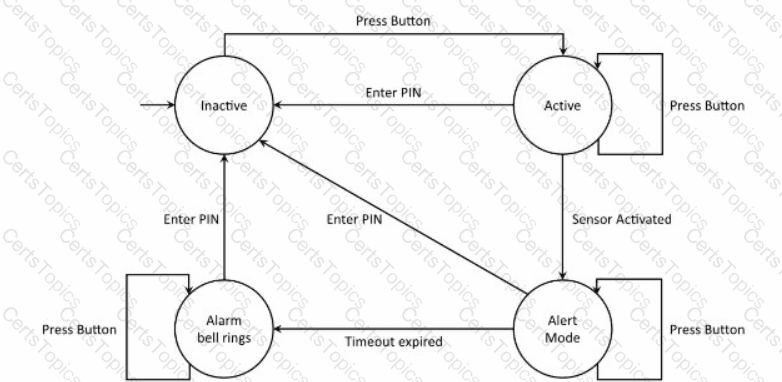

An anti-intrusion system is battery powered and is activated by pressing the only available button. To deactivate the system, the operator must enter a PIN code. The system will stay in alert mode within a configurable timeout and an alarm bell will ring if the system is not deactivated before the timeout expires. The following state transition diagram describes the behavior of the system:

What is the minimum number of test cases needed to cover every unique sequence of exactly 4 states (3 transitions) that starts and ends in the “Inactive” state? Note that “Inactive” is not a final state in the diagram.
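
Counting questions like this come down to enumerating every path of exactly 3 transitions that begins and ends in “Inactive”. The diagram itself is not reproduced in the text, so the sketch below is only an illustration. It works on any transition table given as `(source, event, target)` triples, which means two different events between the same pair of states count as different sequences. `HYPOTHETICAL_TRANSITIONS` and its state and event names are assumptions based loosely on the prose above, not the transitions in the actual diagram. How the enumerated sequences then map onto test cases depends on the diagram and on the exam's conventions.

```python
from typing import Dict, List, Tuple

# A transition is (source_state, event, target_state).
Transition = Tuple[str, str, str]

# HYPOTHETICAL transition table for illustration only; replace it with the
# transitions read from the real state transition diagram.
HYPOTHETICAL_TRANSITIONS: List[Transition] = [
    ("Inactive", "press button", "Alert"),
    ("Alert", "correct PIN", "Inactive"),
    ("Alert", "timeout", "Alarm"),
    ("Alarm", "correct PIN", "Inactive"),
]


def round_trip_sequences(
    transitions: List[Transition], start: str, length: int
) -> List[List[Transition]]:
    """Return every sequence of exactly `length` transitions that starts
    and ends in `start` (intermediate visits to `start` are allowed)."""
    # Index outgoing transitions by their source state.
    outgoing: Dict[str, List[Transition]] = {}
    for t in transitions:
        outgoing.setdefault(t[0], []).append(t)

    results: List[List[Transition]] = []

    def extend(path: List[Transition], current: str) -> None:
        # Stop once the path has the requested number of transitions and
        # keep it only if it returns to the start state.
        if len(path) == length:
            if current == start:
                results.append(list(path))
            return
        # Depth-first: try every transition leaving the current state.
        for t in outgoing.get(current, []):
            path.append(t)
            extend(path, t[2])
            path.pop()

    extend([], start)
    return results


# Print each round trip as "State -> [event] State -> ...".
for seq in round_trip_sequences(HYPOTHETICAL_TRANSITIONS, "Inactive", 3):
    print(" -> ".join([seq[0][0]] + [f"[{e}] {dst}" for _, e, dst in seq]))
```

The depth-first search is exhaustive, so it lists each distinct transition sequence exactly once. This makes it easy to check a hand count against the real diagram once its transitions are entered.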

Which of the following statements about the shift-left approach is false?