What is a benefit of using network aliases in ArubaOS firewall policies?

You can associate a reputation score with the network alias to create rules that filter traffic based on reputation rather than IP.

You can use the aliases to translate client IP addresses to other IP addresses on the other side of the firewall.

You can adjust the IP addresses in the aliases, and the rules using those aliases automatically update.

You can use the aliases to conceal the true IP addresses of servers from potentially untrusted clients.

In ArubaOS firewall policies, using network aliases allows administrators to manage groups of IP addresses more efficiently. By associating multiple IPs with a single alias, any changes made to the alias (like adding or removing IP addresses) are automatically reflected in all firewall rules that reference that alias. This significantly simplifies the management of complex rulesets and ensures consistency across security policies, reducing administrative overhead and minimizing the risk of errors.
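The behaviour described above can be sketched in a few lines of Python. This is only an illustrative model, not ArubaOS configuration or its internal implementation: the alias name `web-servers`, the addresses, and the `Rule` and `action_for` names are all assumptions made for the example. The point is that a rule stores the alias name rather than the addresses, so editing the alias changes what the rule matches.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network

# Hypothetical alias table: alias name -> list of networks.
aliases = {"web-servers": [ip_network("10.1.10.0/24")]}


@dataclass(frozen=True)
class Rule:
    dest_alias: str  # the rule references the alias by name only
    action: str


rules = [Rule(dest_alias="web-servers", action="permit")]


def alias_contains(name: str, address: str) -> bool:
    """Return True if the address falls inside any network of the alias."""
    return any(ip_address(address) in net for net in aliases[name])


def action_for(destination: str) -> str:
    """First matching rule wins; anything unmatched is denied (assumed default)."""
    for rule in rules:
        if alias_contains(rule.dest_alias, destination):
            return rule.action
    return "deny"


print(action_for("10.1.20.5"))  # not yet covered by the alias
aliases["web-servers"].append(ip_network("10.1.20.0/24"))
print(action_for("10.1.20.5"))  # same rule, now matches the updated alias
```

The rule list is never touched between the two calls; only the alias changes.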

You have a network with AOS-CX switches for which HPE Aruba Networking ClearPass Policy Manager (CPPM) acts as the TACACS+ server. When an admin authenticates, CPPM sends a response with:

Aruba-Priv-Admin-User = 1

TACACS+ privilege level = 15

What happens to the user?

The user receives auditors access.

The user receives no access.

The user receives administrators access.

The user receives operators access.

HPE Aruba Networking AOS-CX switches support TACACS+ for administrative authentication, where ClearPass Policy Manager (CPPM) can act as the TACACS+ server. When an admin authenticates, CPPM sends a TACACS+ response that includes attributes such as the TACACS+ privilege level and vendor-specific attributes (VSAs) like Aruba-Priv-Admin-User.

In this scenario, CPPM sends:

TACACS+ privilege level = 15: In TACACS+, privilege level 15 is the highest level and typically grants full administrative access (equivalent to a superuser or administrator role).

Aruba-Priv-Admin-User = 1: This Aruba-specific VSA indicates that the user should be granted the highest level of administrative access on the switch.

On AOS-CX switches, the privilege level 15 maps to the administrator role, which provides full read-write access to all switch functions. The Aruba-Priv-Admin-User = 1 attribute reinforces this by explicitly assigning the admin role, ensuring the user has unrestricted access.

Option A, "The user receives auditors access," is incorrect because auditors typically have read-only access, which corresponds to a lower privilege level (e.g., 1 or 3) on AOS-CX switches.

Option B, "The user receives no access," is incorrect because the authentication was successful, and CPPM sent a response granting access with privilege level 15.

Option D, "The user receives operators access," is incorrect because operators typically have a lower privilege level (e.g., 5 or 7), which provides limited access compared to an administrator.

The HPE Aruba Networking AOS-CX 10.12 Security Guide states:

"When using TACACS+ for administrative authentication, the switch interprets the privilege level returned by the TACACS+ server. A privilege level of 15 maps to the administrator role, granting full read-write access to all switch functions. The Aruba-Priv-Admin-User VSA, when set to 1, explicitly assigns the admin role, ensuring the user has unrestricted access." (Page 189, TACACS+ Authentication Section)

Additionally, the HPE Aruba Networking ClearPass Policy Manager 6.11 User Guide notes:

"ClearPass can send the Aruba-Priv-Admin-User VSA in a TACACS+ response to specify the administrative role on Aruba devices. A value of 1 indicates the admin role, which provides full administrative privileges." (Page 312, TACACS+ Enforcement Section)

What is one difference between EAP-Transport Layer Security (EAP-TLS) and Protected EAP (PEAP)?

EAP-TLS begins with the establishment of a TLS tunnel, but PEAP does not use a TLS tunnel as part of its process.

EAP-TLS requires the supplicant to authenticate with a certificate, but PEAP allows the supplicant to use a username and password.

EAP-TLS creates a TLS tunnel for transmitting user credentials, while PEAP authenticates the server and supplicant during a TLS handshake.

EAP-TLS creates a TLS tunnel for transmitting user credentials securely, while PEAP protects user credentials with TKIP encryption.

EAP-TLS (Extensible Authentication Protocol - Transport Layer Security) and PEAP (Protected EAP) are two EAP methods used for 802.1X authentication in wireless networks, such as those configured with WPA3-Enterprise on HPE Aruba Networking solutions. Both methods are commonly used with ClearPass Policy Manager (CPPM) for secure authentication.

EAP-TLS:

Requires both the supplicant (client) and the server (e.g., CPPM) to present a valid certificate during authentication.

Establishes a TLS tunnel to secure the authentication process, but the primary authentication mechanism is the mutual certificate exchange. The client’s certificate is used to authenticate the client, and the server’s certificate authenticates the server.

PEAP:

Requires only the server to present a certificate to authenticate itself to the client.

Establishes a TLS tunnel to secure the authentication process, within which the client authenticates using a secondary method, typically a username and password (e.g., via MS-CHAPv2 or EAP-GTC).

Option A, "EAP-TLS begins with the establishment of a TLS tunnel, but PEAP does not use a TLS tunnel as part of its process," is incorrect. Both EAP-TLS and PEAP establish a TLS tunnel. In EAP-TLS, the TLS tunnel is used for the mutual certificate exchange, while in PEAP, the TLS tunnel protects the inner authentication (e.g., username/password).

Option B, "EAP-TLS requires the supplicant to authenticate with a certificate, but PEAP allows the supplicant to use a username and password," is correct. This is a key difference: EAP-TLS mandates certificate-based authentication for the client, while PEAP allows the client to authenticate with a username and password inside the TLS tunnel, making PEAP more flexible for environments where client certificates are not deployed.

Option C, "EAP-TLS creates a TLS tunnel for transmitting user credentials, while PEAP authenticates the server and supplicant during a TLS handshake," is incorrect. Both methods use a TLS tunnel, and both authenticate the server during the TLS handshake (using the server’s certificate). In EAP-TLS, the client’s certificate is also part of the TLS handshake, while in PEAP, the client’s credentials (username/password) are sent inside the tunnel after the handshake.

Option D, "EAP-TLS creates a TLS tunnel for transmitting user credentials securely, while PEAP protects user credentials with TKIP encryption," is incorrect. PEAP does not use TKIP (Temporal Key Integrity Protocol) for protecting credentials; TKIP is a legacy encryption method used in WPA/WPA2 for wireless data encryption, not for EAP authentication. PEAP uses the TLS tunnel to protect the inner authentication credentials.
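The comparison above can be summarised as a small data model. This is a minimal sketch for illustration only; the `EapMethod` class and the `supplicant_credential` helper are hypothetical names, and MS-CHAPv2 is used as the inner method simply because the explanation mentions it as a typical choice.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class EapMethod:
    name: str
    server_certificate: bool      # server proves its identity with a certificate
    client_certificate: bool      # supplicant must present a certificate
    inner_method: Optional[str]   # method run inside the TLS tunnel, if any


EAP_TLS = EapMethod("EAP-TLS", True, True, None)
PEAP = EapMethod("PEAP", True, False, "MS-CHAPv2")


def supplicant_credential(method: EapMethod) -> str:
    """Describe what the supplicant uses to authenticate."""
    if method.client_certificate:
        return "client certificate"
    return f"username and password via {method.inner_method}"


for method in (EAP_TLS, PEAP):
    print(method.name, "->", supplicant_credential(method))
```

Both methods have `server_certificate` set, which reflects that the server is authenticated by certificate in either case; they differ only in how the supplicant authenticates.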

The HPE Aruba Networking ClearPass Policy Manager 6.11 User Guide states:

"EAP-TLS requires both the supplicant and the server to present a valid certificate for mutual authentication. The supplicant authenticates using its certificate, and the process is secured within a TLS tunnel. In contrast, PEAP requires only the server to present a certificate to establish a TLS tunnel, within which the supplicant can authenticate using a username and password (e.g., via MS-CHAPv2 or EAP-GTC). This makes PEAP more suitable for environments where client certificates are not deployed." (Page 292, EAP Methods Section)

Additionally, the HPE Aruba Networking Wireless Security Guide notes:

"A key difference between EAP-TLS and PEAP is the client authentication method. EAP-TLS mandates that the client authenticate with a certificate, requiring certificate deployment on all clients. PEAP allows the client to authenticate with a username and password inside a TLS tunnel, making it easier to deploy in environments without client certificates." (Page 40, 802.1X Authentication Methods Section)

What does the NIST model for digital forensics define?

how to define access control policies that will properly protect a company's most sensitive data and digital resources

how to properly collect, examine, and analyze logs and other data, in order to use it as evidence in a security investigation

which types of architecture and security policies are best equipped to help companies establish a Zero Trust Network (ZTN)

which data encryption and authentication algorithms are suitable for enterprise networks in a world that is moving toward quantum computing

The National Institute of Standards and Technology (NIST) provides guidelines on digital forensics, which include methodologies for properly collecting, examining, and analyzing digital evidence. This framework helps ensure that digital evidence is handled in a manner that preserves its integrity and maintains its admissibility in legal proceedings:

Digital Forensics Process: This process involves steps to ensure that data collected from digital sources can be used reliably in investigations and court cases, addressing chain-of-custody issues, proper evidence handling, and detailed documentation of forensic procedures.

How does the AOS firewall determine which rules to apply to a specific client's traffic?

The firewall applies the rules in policies associated with the client's user role.

The firewall applies every rule that includes the client's IP address as the source.

The firewall applies the rules in policies associated with the client's WLAN.

The firewall applies every rule that includes the client's IP address as the source or destination.

In an AOS-8 architecture, the Mobility Controller (MC) includes a stateful firewall that enforces policies on client traffic. The firewall uses user roles to apply policies, allowing granular control over traffic based on the client’s identity and context.

User Roles: In AOS-8, each client is assigned a user role after authentication (e.g., via 802.1X, MAC authentication, or captive portal). The user role contains firewall policies (rules) that define what traffic is allowed or denied for clients in that role. For example, a "guest" role might allow only HTTP/HTTPS traffic, while an "employee" role might allow broader access.

Option A, "The firewall applies the rules in policies associated with the client's user role," is correct. The AOS firewall evaluates traffic based on the user role assigned to the client. Each role has a set of policies (rules) that are applied in order, and the first matching rule determines the action (permit or deny). For example, if a client is in the "employee" role, the firewall applies the rules defined in the "employee" role’s policy.

Option B, "The firewall applies every rule that includes the client's IP address as the source," is incorrect. The firewall does not apply rules based solely on the client’s IP address; it uses the user role. Rules within a role may include IP addresses, but the role determines which rules are evaluated.

Option C, "The firewall applies the rules in policies associated with the client's WLAN," is incorrect. While the WLAN configuration defines the initial role for clients (e.g., the default 802.1X role), the firewall applies rules based on the client’s current user role, which may change after authentication (e.g., via a RADIUS VSA like Aruba-User-Role).

Option D, "The firewall applies every rule that includes the client's IP address as the source or destination," is incorrect for the same reason as Option B. The firewall uses the user role to determine which rules to apply, not just the client’s IP address.
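As a rough illustration of role-based, first-match evaluation, the sketch below models roles as ordered rule lists. It is not the AOS implementation: the role contents, the `ROLE_POLICIES` table, and the implicit deny at the end are assumptions chosen to mirror the "guest" and "employee" examples in the explanation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    service: str  # "any" matches every service
    action: str   # "permit" or "deny"


# Hypothetical roles; each role carries its own ordered list of rules.
ROLE_POLICIES = {
    "guest": [Rule("http", "permit"), Rule("https", "permit"), Rule("any", "deny")],
    "employee": [Rule("any", "permit")],
}


def evaluate(role: str, service: str) -> str:
    """Walk the rules of the client's role in order; the first match decides."""
    for rule in ROLE_POLICIES[role]:
        if rule.service in (service, "any"):
            return rule.action
    return "deny"  # assumed implicit deny when nothing matches


print(evaluate("guest", "ssh"))
print(evaluate("employee", "ssh"))
```

The client's IP address never appears in the lookup: the same traffic gets a different result purely because the role differs.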

The HPE Aruba Networking AOS-8 8.11 User Guide states:

"The AOS firewall on the Mobility Controller applies rules based on the user role assigned to a client. Each user role contains a set of firewall policies that define the allowed or denied traffic for clients in that role. For example, a policy in the ‘employee’ role might include a rule like ipv4 user any http permit to allow HTTP traffic. The firewall evaluates the rules in the client’s role in order, and the first matching rule determines the action for the traffic." (Page 325, Firewall Policies Section)

Additionally, the HPE Aruba Networking Security Guide notes:

"User roles in AOS-8 provide a powerful mechanism for firewall policy enforcement. The firewall determines which rules to apply to a client’s traffic by looking at the policies associated with the client’s user role, which is assigned during authentication or via a RADIUS VSA like Aruba-User-Role." (Page 50, Role-Based Access Control Section)

What is one benefit of enabling Enhanced Secure mode on an ArubaOS-Switch?

Control Plane policing rate limits edge ports to mitigate DoS attacks on network servers.

A self-signed certificate is automatically added to the switch trusted platform module (TPM).

Insecure algorithms for protocols such as SSH are automatically disabled.

All interfaces have 802.1X authentication enabled on them by default.

In the context of ArubaOS-Switches, enabling Enhanced Secure mode has several benefits, one of which includes disabling insecure algorithms for protocols such as SSH. This is in line with security best practices, as older, less secure algorithms are known to be vulnerable to various types of cryptographic attacks. When Enhanced Secure mode is enabled, the switch automatically restricts the use of such algorithms, thereby enhancing the security of management access.

What is symmetric encryption?

It simultaneously creates ciphertext and a same-size MAC.

It is any form of encryption that ensures that the ciphertext is the same length as the plaintext.

It uses the same key to encrypt plaintext as to decrypt ciphertext.

It uses a key that is double the size of the message which it encrypts.

Symmetric encryption is a type of encryption where the same key is used to encrypt and decrypt the message. It's called "symmetric" because the key used for encryption is identical to the key used for decryption. The data, or plaintext, is transformed into ciphertext during encryption, and then the same key is used to revert the ciphertext back to plaintext during decryption. It is a straightforward method but requires secure handling and exchange of the encryption key.
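A toy example makes the "same key both ways" property concrete. The sketch below is an assumption-laden illustration, not a production cipher: it derives a keystream from SHA-256 and XORs it with the data, and it omits the nonce and integrity protection that real algorithms such as AES-GCM provide. The function names are hypothetical.

```python
import hashlib
import secrets


def keystream(key: bytes, length: int) -> bytes:
    """Derive `length` pseudo-random bytes from the key (toy construction)."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def xor_cipher(key: bytes, data: bytes) -> bytes:
    """Encrypt or decrypt: XOR with the keystream is its own inverse."""
    return bytes(a ^ b for a, b in zip(data, keystream(key, len(data))))


key = secrets.token_bytes(32)          # the single shared secret
plaintext = b"attack at dawn"
ciphertext = xor_cipher(key, plaintext)
recovered = xor_cipher(key, ciphertext)  # same key reverses the operation
print(recovered == plaintext)
```

Because both parties need the identical key, the hard part in practice is distributing that key securely, as the explanation notes.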

A company with 439 employees wants to deploy an open WLAN for guests. The company wants the experience to be as follows:

*Guests select the WLAN and connect without having to enter a password.

*Guests are redirected to a welcome web page and log in.

The company also wants to provide encryption for the network for devices that are capable. Which security options should you implement for the WLAN?

Opportunistic Wireless Encryption (OWE) and WPA3-Personal

WPA3-Personal and MAC-Auth

Captive portal and Opportunistic Wireless Encryption (OWE) in transition mode

Captive portal and WPA3-Personal

Opportunistic Wireless Encryption (OWE) provides encrypted communications on open Wi-Fi networks, which addresses the company's desire to have encryption without requiring a password for guests. It can work in transition mode, which allows for the use of OWE by clients that support it, while still permitting legacy clients to connect without encryption. Combining this with a captive portal enables the desired welcome web page for guests to log in.
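The guest experience under this design can be expressed as a simple decision. The function below is a hypothetical sketch of the outcome for a connecting client, not controller logic; the field names are assumptions.

```python
def guest_session(client_supports_owe: bool) -> dict:
    """Outcome for a guest on an open WLAN using OWE transition mode."""
    return {
        "passphrase_required": False,           # open network, nothing to type
        "link_encrypted": client_supports_owe,  # only OWE-capable clients get encryption
        "login": "captive portal",              # every guest is redirected to log in
    }


print(guest_session(client_supports_owe=True))
print(guest_session(client_supports_owe=False))
```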

You have been asked to find logs related to port authentication on an ArubaOS-CX switch for events logged in the past several hours, but you are having trouble searching through the logs. What is one approach that you can take to find the relevant logs?

Add the "-C and *-c port-access" options to the "show logging" command.

Configure a logging filter for the "port-access" category, and apply that filter globally.

Enable debugging for "portaccess" to move the relevant logs to a buffer.

Specify a logging facility that selects for "port-access" messages.

In ArubaOS-CX, managing and searching logs can be crucial for tracking and diagnosing issues related to network operations such as port authentication. To efficiently find logs related to port authentication, configuring a logging filter specifically for this category is highly effective.

Logging Filter Configuration: In ArubaOS-CX, you can configure logging filters to refine the logs that are collected and viewed. By setting up a filter for the "port-access" category, you focus the logging system to only capture and display entries related to port authentication events. This approach reduces the volume of log data to sift through, making it easier to identify relevant issues.

Global Application of Filter: Applying the filter globally ensures that all relevant log messages, regardless of their origin within the switch's modules or interfaces, are captured under the specified category. This global application is crucial for comprehensive monitoring across the entire device.

Alternative Options and Their Evaluation:

Option A: Adding "-C and *-c port-access" to the "show logging" command is not a standard command format in ArubaOS-CX for filtering logs directly through the show command.

Option C: Enabling debugging for "portaccess" indeed increases the detail of logs but primarily serves to provide real-time diagnostic information rather than filtering existing logs.

Option D: Specifying a logging facility focuses on routing logs to different destinations or subsystems and does not inherently filter by log category like port-access.
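The general idea of narrowing logs by category and time window can be sketched outside the switch. The example below is not AOS-CX syntax or output: the entry format, timestamps, and messages are invented for illustration, and `recent_in_category` is a hypothetical helper.

```python
from datetime import datetime, timedelta

# Hypothetical entries: (timestamp, category, message). Not real switch output.
ENTRIES = [
    ("2024-05-01T02:15:00", "port-access", "client authentication failed on port 1/1/3"),
    ("2024-05-01T09:40:00", "lldp", "neighbor added on port 1/1/49"),
    ("2024-05-01T10:05:00", "port-access", "client authenticated on port 1/1/7"),
]


def recent_in_category(entries, category, now, hours):
    """Keep messages of one category logged within the last `hours` hours."""
    cutoff = now - timedelta(hours=hours)
    return [
        message
        for timestamp, cat, message in entries
        if cat == category and datetime.fromisoformat(timestamp) >= cutoff
    ]


now = datetime(2024, 5, 1, 11, 0, 0)
print(recent_in_category(ENTRIES, "port-access", now, hours=3))
```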

You have been authorized to use containment to respond to rogue APs detected by ArubaOS Wireless Intrusion Prevention (WIP). What is a consideration for using tarpit containment versus traditional wireless containment?

Rather than function wirelessly, tarpit containment sends ARP frames over the wired network to poison rogue APs' ARP tables and prevent them from transmitting on the wired network.

Rather than target all clients connected to rogue APs, tarpit containment targets only authorized clients that are connected to a rogue AP, reducing the chance of negative effects on neighbors.

Tarpit containment does not require an RF Protect license to function, while traditional wireless containment does.

Tarpit containment forms associations with clients to enable more effective containment with fewer disassociation frames than traditional wireless containment.

Tarpit containment is a method used in ArubaOS Wireless Intrusion Prevention (WIP) to contain rogue APs. It differs from traditional wireless containment in several ways, particularly in how it interacts with clients and manages network resources.

Tarpit containment works by spoofing frames from an AP to confuse a client about its association. It forces the client to associate with a fake channel or BSSID, which is more efficient than rogue containment via repeated de-authorization requests. This method is designed to be less disruptive and more resource-efficient.

Here’s why the other options are not correct:

Option A is incorrect because tarpit containment does not involve sending ARP frames over the wired network. It operates wirelessly by creating a fake channel or BSSID.

Option B is incorrect because tarpit containment does not selectively target authorized clients; it affects all clients connected to the rogue AP.

Option C is incorrect because tarpit containment does require an RF Protect license to function.

Therefore, Option D is the correct answer. Tarpit containment is more effective at keeping clients off the network with fewer disassociation frames than traditional wireless containment. It achieves this by forming associations with clients, which leads to a more efficient use of airtime and reduces the chance of negative effects on legitimate network users.

This company has AOS-CX switches. The exhibit shows one access layer switch, Switch-2, as an example, but the campus actually has more switches. Switch-1 is a core switch that acts as the default router for end-user devices.

What is a correct way to configure the switches to protect against exploits from untrusted end-user devices?

On Switch-1, enable ARP inspection on VLAN 100 and DHCP snooping on VLANs 15 and 25.

On Switch-2, enable DHCP snooping globally and on VLANs 15 and 25. Later, enable ARP inspection on the same VLANs.

On Switch-2, enable BPDU filtering on all edge ports in order to prevent eavesdropping attacks by untrusted devices.

On Switch-1, enable DHCP snooping on VLAN 100 and ARP inspection on VLANs 15 and 25.

The scenario involves AOS-CX switches in a two-tier topology with Switch-1 as the core switch (default router) on VLAN 100 and Switch-2 as an access layer switch with VLANs 15 and 25, where end-user devices connect. The goal is to protect against exploits from untrusted end-user devices, such as DHCP spoofing or ARP poisoning attacks, which are common threats in access layer networks.

DHCP Snooping: This feature protects against rogue DHCP servers by filtering DHCP messages. It should be enabled on the access layer switch (Switch-2) where end-user devices connect, specifically on the VLANs where these devices reside (VLANs 15 and 25). DHCP snooping builds a binding table of legitimate IP-to-MAC mappings, which can be used by other features like ARP inspection.

ARP Inspection: This feature prevents ARP poisoning attacks by validating ARP packets against the DHCP snooping binding table. It should also be enabled on the access layer switch (Switch-2) on VLANs 15 and 25, where untrusted devices are connected.

Option B, "On Switch-2, enable DHCP snooping globally and on VLANs 15 and 25. Later, enable ARP inspection on the same VLANs," is correct. DHCP snooping must be enabled first to build the binding table, and then ARP inspection can use this table to validate ARP packets. This configuration should be applied on Switch-2, the access layer switch, because that’s where untrusted end-user devices connect.

Option A, "On Switch-1, enable ARP inspection on VLAN 100 and DHCP snooping on VLANs 15 and 25," is incorrect. Switch-1 is the core switch and does not directly connect to end-user devices on VLANs 15 and 25. DHCP snooping and ARP inspection should be enabled on the access layer switch (Switch-2) where the devices reside. Additionally, enabling ARP inspection on VLAN 100 (where the DHCP server is) is unnecessary since the DHCP server is a trusted device.

Option C, "On Switch-2, enable BPDU filtering on all edge ports in order to prevent eavesdropping attacks by untrusted devices," is incorrect. BPDU filtering is used to prevent spanning tree protocol (STP) attacks by blocking BPDUs on edge ports, but it does not protect against eavesdropping or other exploits like DHCP spoofing or ARP poisoning, which are more relevant in this context.

Option D, "On Switch-1, enable DHCP snooping on VLAN 100 and ARP inspection on VLANs 15 and 25," is incorrect for the same reason as Option A. Switch-1 is not the appropriate place to enable these features since it’s not directly connected to the untrusted devices on VLANs 15 and 25.
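The dependency between the two features (DHCP snooping builds the binding table, ARP inspection consults it) can be modelled in a short sketch. This is a simplified illustration rather than AOS-CX behaviour in detail: the `SnoopingSwitch` class, the port names, and the addresses are all hypothetical, and real switches track more state such as lease times.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Binding:
    mac: str
    ip: str
    vlan: int
    port: str


class SnoopingSwitch:
    def __init__(self, protected_vlans, trusted_ports):
        self.protected_vlans = set(protected_vlans)
        self.trusted_ports = set(trusted_ports)
        self.bindings = {}  # (vlan, ip) -> Binding, filled by DHCP snooping

    def dhcp_server_message_allowed(self, vlan, port):
        """Server replies are accepted only from trusted ports on protected VLANs."""
        return vlan not in self.protected_vlans or port in self.trusted_ports

    def learn_lease(self, mac, ip, vlan, port):
        """Record a lease seen by DHCP snooping."""
        self.bindings[(vlan, ip)] = Binding(mac, ip, vlan, port)

    def arp_allowed(self, sender_mac, sender_ip, vlan, port):
        """ARP inspection: the sender must match a snooped binding."""
        if vlan not in self.protected_vlans or port in self.trusted_ports:
            return True
        binding = self.bindings.get((vlan, sender_ip))
        return binding is not None and binding.mac == sender_mac and binding.port == port


switch2 = SnoopingSwitch(protected_vlans=[15, 25], trusted_ports=["1/1/49"])
switch2.learn_lease("aa:bb:cc:00:00:01", "10.1.15.50", 15, "1/1/1")
print(switch2.arp_allowed("aa:bb:cc:00:00:01", "10.1.15.50", 15, "1/1/1"))  # legitimate host
print(switch2.arp_allowed("aa:bb:cc:00:00:99", "10.1.15.50", 15, "1/1/2"))  # spoofed sender
```

With an empty binding table every ARP packet on an untrusted port would fail the check, which is why the snooping step has to come first.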

The HPE Aruba Networking AOS-CX 10.12 Security Guide states:

"DHCP snooping should be enabled on access layer switches where untrusted end-user devices connect. It must be enabled globally and on the specific VLANs where the devices reside (e.g., dhcp-snooping vlan 15,25). This feature builds a binding table of IP-to-MAC mappings, which can be used by Dynamic ARP Inspection (DAI) to prevent ARP poisoning attacks. DAI should also be enabled on the same VLANs (e.g., ip arp inspection vlan 15,25) after DHCP snooping is configured, ensuring that ARP packets are validated against the DHCP snooping binding table." (Page 145, DHCP Snooping and ARP Inspection Section)

Additionally, the guide notes:

"Dynamic ARP Inspection (DAI) and DHCP snooping are typically configured on access layer switches to protect against exploits from untrusted devices, such as DHCP spoofing and ARP poisoning. These features should be applied to the VLANs where end-user devices connect, not on core switches unless those VLANs are directly connected to untrusted devices." (Page 146, Best Practices Section)

What is one method for HPE Aruba Networking ClearPass Policy Manager (CPPM) to use DHCP to classify an endpoint?

It can determine information such as the endpoint OS from the order of options listed in Option 55 of a DHCP Discover packet.

It can respond to a client’s DHCP Discover with different DHCP Offers and then analyze the responses to identify the client OS.

It can snoop DHCP traffic to register the clients’ IP addresses. It then knows where to direct its HTTP requests to actively probe for information about the client.

It can alter the DHCP Offer to insert itself as a proxy gateway. It will then be inline in the traffic flow and can apply traffic analytics to classify clients.

HPE Aruba Networking ClearPass Policy Manager (CPPM) uses device profiling to classify endpoints, and one of its passive profiling methods involves analyzing DHCP traffic. DHCP fingerprinting is a technique where ClearPass examines the DHCP packets sent by a client, particularly the DHCP Discover packet, to identify the device’s operating system or type based on specific attributes.

Option A, "It can determine information such as the endpoint OS from the order of options listed in Option 55 of a DHCP Discover packet," is correct. DHCP Option 55 (Parameter Request List) is a field in the DHCP Discover packet where the client specifies the list of DHCP options it requests from the server. The order and combination of these options are often unique to specific operating systems or device types (e.g., Windows, Linux, macOS, or IoT devices). ClearPass maintains a database of DHCP fingerprints and matches the Option 55 data against this database to classify the endpoint.

Option B, "It can respond to a client’s DHCP Discover with different DHCP Offers and then analyze the responses," is incorrect because ClearPass does not act as a DHCP server or send DHCP Offers. It passively snoops DHCP traffic rather than actively responding to DHCP requests.

Option C, "It can snoop DHCP traffic to register the clients’ IP addresses," is partially correct in that ClearPass does snoop DHCP traffic, but the purpose is not just to register IP addresses for HTTP probing. While ClearPass can use IP addresses for active probing (e.g., HTTP or SNMP), the question specifically asks about using DHCP to classify, which is done via fingerprinting, not IP registration.

Option D, "It can alter the DHCP Offer to insert itself as a proxy gateway," is incorrect because ClearPass does not modify DHCP packets or act as a proxy gateway. This is not a function of ClearPass in the context of DHCP-based profiling.
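To make the fingerprinting idea concrete, the sketch below extracts Option 55 from the options field of a DHCP packet and looks the ordered list up in a table. The option encoding (code, length, value, with 0 as pad and 255 as end) follows the standard DHCP option format; everything else is an assumption. In particular, the fingerprint table holds made-up placeholder entries, not values from the ClearPass fingerprint database.

```python
def parameter_request_list(options: bytes) -> tuple:
    """Return the option codes listed in Option 55, in the order the client sent them."""
    i = 0
    while i < len(options):
        code = options[i]
        if code == 255:          # end option
            break
        if code == 0:            # pad option has no length byte
            i += 1
            continue
        if i + 1 >= len(options):
            break                # truncated option, stop parsing
        length = options[i + 1]
        value = options[i + 2 : i + 2 + length]
        if code == 55:
            return tuple(value)
        i += 2 + length
    return ()


# Placeholder fingerprints for illustration only; order is part of the key.
FINGERPRINTS = {
    (1, 3, 6, 15): "Example OS A",
    (3, 1, 6, 15): "Example OS B",
}


def classify(options: bytes) -> str:
    return FINGERPRINTS.get(parameter_request_list(options), "unknown")


# Option 53 (message type = Discover), then Option 55 with four requested codes.
discover_options = bytes([53, 1, 1, 55, 4, 1, 3, 6, 15, 255])
print(classify(discover_options))
```

Two clients that request the same options in a different order map to different entries, which is why the order matters for classification.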

The HPE Aruba Networking ClearPass Policy Manager 6.11 User Guide states:

"ClearPass can profile devices using DHCP fingerprinting, a passive profiling method. When a device sends a DHCP Discover packet, ClearPass examines the packet’s attributes, including the order of options in DHCP Option 55 (Parameter Request List). The combination and order of these options are often unique to specific operating systems or device types. ClearPass matches these attributes against its DHCP fingerprint database to classify the device (e.g., identifying a device as a Windows 10 laptop or an Android phone)." (Page 247, DHCP Fingerprinting Section)

Additionally, the ClearPass Device Insight Data Sheet notes:

"DHCP fingerprinting allows ClearPass to passively collect device information without interfering with network traffic. By analyzing DHCP Option 55, ClearPass can accurately determine the device’s operating system and type, enabling precise policy enforcement." (Page 3)

You have been instructed to look in the ArubaOS Security Dashboard's client list. Your goal is to find clients that belong to the company and have connected to devices that might belong to hackers.

Which client fits this description?

MAC address: d8:50:e6:f3:70:ab; Client Classification: Interfering; AP Classification: Rogue

MAC address: d8:50:e6:f3:6e:c5; Client Classification: Interfering; AP Classification: Neighbor

MAC address: d8:50:e6:f3:6e:60; Client Classification: Interfering; AP Classification: Authorized

MAC address: d8:50:e6:f3:6d:a4; Client Classification: Authorized; AP Classification: Rogue

The ArubaOS Security Dashboard, part of the AOS-8 architecture (Mobility Controllers or Mobility Master), provides visibility into wireless clients and access points (APs) through its Wireless Intrusion Prevention (WIP) system. The goal is to identify clients that belong to the company (i.e., authorized clients) and have connected to devices that might belong to hackers (i.e., rogue APs).

Client Classification:

Authorized: A client that has successfully authenticated to an authorized AP and is recognized as part of the company’s network (e.g., an employee device).

Interfering: A client that is not authenticated to the company’s network and is considered external or potentially malicious.

AP Classification:

Authorized: An AP that is part of the company’s network and managed by the MC/MM.

Rogue: An AP that is not authorized and is suspected of being malicious (e.g., connected to the company’s wired network without permission).

Neighbor: An AP that is not part of the company’s network but is not connected to the wired network (e.g., a nearby AP from another organization).

The requirement is to find a client that is authorized (belongs to the company) and connected to a rogue AP (might belong to hackers).

Option A: MAC address: d8:50:e6:f3:70:ab; Client Classification: Interfering; AP Classification: Rogue. This client is classified as "Interfering," meaning it does not belong to the company. Although it is connected to a rogue AP, it does not meet the requirement of being a company client.

Option B: MAC address: d8:50:e6:f3:6e:c5; Client Classification: Interfering; AP Classification: Neighbor. This client is "Interfering" (not a company client) and connected to a "Neighbor" AP, which is not considered a hacker’s device (it’s just a nearby AP).

Option C: MAC address: d8:50:e6:f3:6e:60; Client Classification: Interfering; AP Classification: Authorized. This client is "Interfering" (not a company client) and connected to an "Authorized" AP, which is part of the company’s network, not a hacker’s device.

Option D: MAC address: d8:50:e6:f3:6d:a4; Client Classification: Authorized; AP Classification: Rogue. This client is "Authorized," meaning it belongs to the company, and it is connected to a "Rogue" AP, which might belong to hackers. This matches the requirement perfectly.
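The selection logic amounts to a two-condition filter over the client list. The sketch below applies it to the four entries from the question; the dictionary layout and function name are assumptions made for the example, not a dashboard export format.

```python
# The four clients from the question, as (hypothetically structured) records.
clients = [
    {"mac": "d8:50:e6:f3:70:ab", "client": "Interfering", "ap": "Rogue"},
    {"mac": "d8:50:e6:f3:6e:c5", "client": "Interfering", "ap": "Neighbor"},
    {"mac": "d8:50:e6:f3:6e:60", "client": "Interfering", "ap": "Authorized"},
    {"mac": "d8:50:e6:f3:6d:a4", "client": "Authorized", "ap": "Rogue"},
]


def company_clients_on_rogue_aps(entries):
    """Company (Authorized) clients that are associated with a Rogue AP."""
    return [
        entry["mac"]
        for entry in entries
        if entry["client"] == "Authorized" and entry["ap"] == "Rogue"
    ]


print(company_clients_on_rogue_aps(clients))
```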

The HPE Aruba Networking AOS-8 8.11 User Guide states:

"The Security Dashboard in ArubaOS provides a client list that includes the client classification and the AP classification for each client. A client classified as ‘Authorized’ has successfully authenticated to an authorized AP and is part of the company’s network. A ‘Rogue’ AP is an unauthorized AP that is suspected of being malicious, often because it is connected to the company’s wired network (e.g., detected via Eth-Wired-Mac-Table match). To identify potential security risks, look for authorized clients connected to rogue APs, as this may indicate that a company device has connected to a hacker’s AP." (Page 415, Security Dashboard Section)

Additionally, the HPE Aruba Networking Security Guide notes:

"An ‘Authorized’ client is one that has authenticated to an AP managed by the controller, typically an employee or corporate device. A ‘Rogue’ AP is classified as such if it is not authorized and poses a potential threat, such as being connected to the corporate LAN. Identifying authorized clients connected to rogue APs is critical for detecting potential man-in-the-middle attacks." (Page 78, WIP Classifications Section)

Your AOS solution has detected a rogue AP with Wireless Intrusion Prevention (WIP). Which information about the detected radio can best help you to locate the rogue device?

The detecting devices

The match method

The confidence level

The match type

In an HPE Aruba Networking AOS-8 solution, the Wireless Intrusion Prevention (WIP) system is used to detect and classify rogue Access Points (APs). When a rogue AP is detected, the AOS system provides various pieces of information about the detected radio, such as the SSID, BSSID, match method, match type, confidence level, and the devices that detected the rogue AP. The goal is to locate the physical rogue device, which requires identifying its approximate location in the network environment.

Option A, "The detecting devices," is correct. The "detecting devices" refer to the authorized APs or radios that detected the rogue AP’s signal. This information is critical for locating the rogue device because it provides the physical locations of the detecting APs. By knowing which APs detected the rogue AP and their signal strength (RSSI) readings, you can triangulate the approximate location of the rogue AP. For example, if AP-1 in Building A and AP-2 in Building B both detect the rogue AP, and AP-1 reports a stronger signal, the rogue AP is likely closer to AP-1 in Building A.

Option B, "The match method," is incorrect. The match method (e.g., "Plus one," "Eth-Wired-Mac-Table") indicates how the rogue AP was classified (e.g., based on a BSSID close to a known MAC or its presence on the wired network). While this helps understand why the AP was classified as rogue, it does not directly help locate the physical device.

Option C, "The confidence level," is incorrect. The confidence level indicates the likelihood that the AP is correctly classified as rogue (e.g., 90% confidence). This is useful for assessing the reliability of the classification but does not provide location information.

Option D, "The match type," is incorrect. The match type (e.g., "Rogue," "Suspected Rogue") specifies the category of the classification. Like the match method, it helps understand the classification but does not aid in physically locating the device.
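The comparison described under Option A (the detecting AP with the stronger signal is probably nearer the rogue) can be sketched in a few lines of Python. The AP names and RSSI values below are hypothetical, and the function is an illustration rather than anything the controller exposes.

```python
def rank_detectors(readings):
    """Sort detecting APs from strongest to weakest RSSI.

    RSSI is in dBm, so values closer to 0 mean a stronger signal.
    """
    return sorted(readings.items(), key=lambda item: item[1], reverse=True)


# Hypothetical readings: detecting AP -> RSSI (dBm) reported for the rogue BSSID.
readings = {"AP-1 (Building A)": -48, "AP-2 (Building B)": -79}

ranked = rank_detectors(readings)
nearest_ap, strongest_rssi = ranked[0]
print(f"Start the physical search near {nearest_ap} ({strongest_rssi} dBm)")
```

A ranking like this only narrows the search area; walls and interference distort RSSI, so more detecting APs give a better estimate.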

The HPE Aruba Networking AOS-8 8.11 User Guide states:

"When a rogue AP is detected by the Wireless Intrusion Prevention (WIP) system, the ‘detecting devices’ information lists the authorized APs or radios that detected the rogue AP’s signal. This is the most useful information for locating the rogue device, as it provides the physical locations of the detecting APs. By analyzing the signal strength (RSSI) reported by each detecting device, you can triangulate the approximate location of the rogue AP. For example, if AP-1 and AP-2 detect the rogue AP, and AP-1 reports a higher RSSI, the rogue AP is likely closer to AP-1." (Page 416, Rogue AP Detection Section)

Additionally, the HPE Aruba Networking Security Guide notes:

"To locate a rogue AP, use the ‘detecting devices’ information in the AOS Detected Radios page. This lists the APs that detected the rogue AP, along with signal strength data, enabling triangulation to pinpoint the rogue device’s location." (Page 80, Locating Rogue APs Section)

What is a correct guideline for the management protocols that you should use on AOS-CX switches?

Make sure that SSH is disabled and use HTTPS instead.

Make sure that Telnet is disabled and use SSH instead.

Make sure that Telnet is disabled and use TFTP instead.

Make sure that HTTPS is disabled and use SSH instead.

AOS-CX switches support various management protocols for administrative access, such as SSH, Telnet, HTTPS, and TFTP. Security best practices for managing network devices, including AOS-CX switches, emphasize using secure protocols to protect management traffic from eavesdropping and unauthorized access.

Option B, "Make sure that Telnet is disabled and use SSH instead," is correct. Telnet is an insecure protocol because it sends all data, including credentials, in plaintext, making it vulnerable to eavesdropping. SSH (Secure Shell) provides encrypted communication for remote management, ensuring that credentials and commands are protected. HPE Aruba Networking recommends disabling Telnet and enabling SSH for secure management access on AOS-CX switches.

Option A, "Make sure that SSH is disabled and use HTTPS instead," is incorrect. SSH and HTTPS serve different purposes: SSH is for CLI access, while HTTPS is for web-based management. Disabling SSH would prevent secure CLI access, which is not a recommended practice. Both SSH and HTTPS should be enabled for secure management.

Option C, "Make sure that Telnet is disabled and use TFTP instead," is incorrect. TFTP (Trivial File Transfer Protocol) is used for file transfers (e.g., firmware updates), not for management access like Telnet or SSH. TFTP is also insecure (no encryption), so it’s not a suitable replacement for Telnet.

Option D, "Make sure that HTTPS is disabled and use SSH instead," is incorrect. HTTPS is used for secure web-based management and should not be disabled. Both HTTPS and SSH are secure protocols and should be used together for different management interfaces (web and CLI, respectively).
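The guideline behind these four options can be expressed as a small audit check. The sketch below is a hypothetical Python helper, not a switch command or API: it takes a set of service names that an administrator has found enabled and reports what the guideline would change.

```python
INSECURE_SERVICES = {"telnet", "tftp"}    # unencrypted protocols named in the explanation
RECOMMENDED_SERVICES = {"ssh", "https"}   # encrypted CLI and web management


def audit_management_services(enabled):
    """Return a list of findings for a collection of enabled service names."""
    enabled = {name.lower() for name in enabled}
    findings = [f"disable {name}" for name in sorted(enabled & INSECURE_SERVICES)]
    findings += [f"enable {name}" for name in sorted(RECOMMENDED_SERVICES - enabled)]
    return findings


# Hypothetical switch state: Telnet and HTTPS on, SSH off.
findings = audit_management_services({"telnet", "https"})
print(findings)
```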

The HPE Aruba Networking AOS-CX 10.12 Security Guide states:

"For secure management of AOS-CX switches, disable insecure protocols like Telnet, which sends data in plaintext, and use SSH instead. SSH provides encrypted communication for CLI access, protecting credentials and commands from eavesdropping. Use the command no telnet-server to disable Telnet and ssh-server to enable SSH. Additionally, enable HTTPS for web-based management with https-server to ensure all management traffic is encrypted." (Page 195, Secure Management Protocols Section)

Additionally, the HPE Aruba Networking Security Best Practices Guide notes:

"A key guideline for managing AOS-CX switches is to disable Telnet and enable SSH for CLI access. Telnet is insecure and should not be used in production environments, as it transmits credentials in plaintext. SSH ensures secure remote management, and HTTPS should also be enabled for web access." (Page 25, Management Security Section)

Which is an accurate description of a type of malware?

Worms are usually delivered in spear-phishing attacks and require users to open and run a file.

Rootkits can help hackers gain elevated access to a system and often actively conceal themselves from detection.

A Trojan is any type of malware that replicates itself and spreads to other systems automatically.

Malvertising can only infect a system if the user encounters the malware on an untrustworthy site.

Malware (malicious software) is a broad category of software designed to harm or exploit systems. HPE Aruba Networking documentation often discusses malware in the context of network security threats and mitigation strategies, such as those detected by the Wireless Intrusion Prevention (WIP) system.

Option A, "Worms are usually delivered in spear-phishing attacks and require users to open and run a file," is incorrect. Worms are a type of malware that replicate and spread automatically across networks without user interaction (e.g., by exploiting vulnerabilities). They are not typically delivered via spear-phishing, which is more associated with Trojans or ransomware. Worms do not require users to open and run a file; that behavior is characteristic of Trojans.

Option B, "Rootkits can help hackers gain elevated access to a system and often actively conceal themselves from detection," is correct. A rootkit is a type of malware that provides hackers with privileged (elevated) access to a system, often by modifying the operating system or kernel. Rootkits are designed to hide their presence (e.g., by concealing processes, files, or network connections) to evade detection by antivirus software or system administrators, making them a stealthy and dangerous type of malware.

Option C, "A Trojan is any type of malware that replicates itself and spreads to other systems automatically," is incorrect. A Trojan is a type of malware that disguises itself as legitimate software to trick users into installing it. Unlike worms, Trojans do not replicate or spread automatically; they require user interaction (e.g., downloading and running a file) to infect a system.

Option D, "Malvertising can only infect a system if the user encounters the malware on an untrustworthy site," is incorrect. Malvertising (malicious advertising) involves embedding malware in online ads, which can appear on both trustworthy and untrustworthy sites. For example, a legitimate website might unknowingly serve a malicious ad that exploits a browser vulnerability to infect the user’s system, even without the user clicking the ad.

The HPE Aruba Networking Security Guide states:

"Rootkits are a type of malware that can help hackers gain elevated access to a system by modifying the operating system or kernel. They often actively conceal themselves from detection by hiding processes, files, or network connections, making them difficult to detect and remove. Rootkits are commonly used to maintain persistent access to a compromised system." (Page 22, Malware Types Section)

Additionally, the HPE Aruba Networking AOS-8 8.11 User Guide notes:

"The Wireless Intrusion Prevention (WIP) system can detect various types of malware. Rootkits, for example, are designed to provide hackers with elevated access and often conceal themselves to evade detection, allowing the hacker to maintain control over the infected system for extended periods." (Page 421, Malware Threats Section)

What is a benefit of Opportunistic Wireless Encryption (OWE)?

It allows both WPA2-capable and WPA3-capable clients to authenticate to the same WPA-Personal WLAN.

It offers more control over who can connect to the wireless network when compared with WPA2-Personal.

It allows anyone to connect, but provides better protection against eavesdropping than a traditional open network.

It provides protection for wireless clients against both honeypot APs and man-in-the-middle (MITM) attacks.

Opportunistic Wireless Encryption (OWE) is a WPA3 feature designed for open wireless networks, where no password or authentication is required to connect. OWE enhances security by providing encryption for devices that support it, without requiring a pre-shared key (PSK) or 802.1X authentication.

Option C, "It allows anyone to connect, but provides better protection against eavesdropping than a traditional open network," is correct. In a traditional open network (no encryption), all traffic is sent in plaintext, making it vulnerable to eavesdropping. OWE allows anyone to connect (as it’s an open network), but it negotiates unique encryption keys for each client using a Diffie-Hellman key exchange. This ensures that client traffic is encrypted with AES (e.g., using AES-GCMP), protecting it from eavesdropping. OWE in transition mode also supports non-OWE devices, which connect without encryption, but OWE-capable devices benefit from the added security.

Option A, "It allows both WPA2-capable and WPA3-capable clients to authenticate to the same WPA-Personal WLAN," is incorrect. OWE is for open networks, not WPA-Personal (which uses a PSK). WPA2/WPA3 transition mode (not OWE) allows both WPA2 and WPA3 clients to connect to the same WPA-Personal WLAN.

Option B, "It offers more control over who can connect to the wireless network when compared with WPA2-Personal," is incorrect. OWE is an open network protocol, meaning it offers less control over who can connect compared to WPA2-Personal, which requires a PSK for access.

Option D, "It provides protection for wireless clients against both honeypot APs and man-in-the-middle (MITM) attacks," is incorrect. OWE provides encryption to prevent eavesdropping, but it does not protect against honeypot APs (rogue APs broadcasting the same SSID) or MITM attacks, as it lacks authentication mechanisms to verify the AP’s identity. Protection against such attacks requires 802.1X authentication (e.g., WPA3-Enterprise) or other security measures.
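The per-client key negotiation described under Option C can be illustrated with a toy Diffie-Hellman exchange. This is a minimal sketch under loud assumptions: the prime, base, and SHA-256 key derivation are chosen for readability and are not the groups or key derivation that OWE actually specifies, and all function names are hypothetical.

```python
import hashlib
import secrets

# Toy parameters for illustration only (not the groups used by OWE).
P = 2**127 - 1  # a Mersenne prime
G = 3


def make_keypair():
    """Return a (private, public) Diffie-Hellman pair."""
    private = secrets.randbelow(P - 3) + 2  # random value in 2..P-2
    public = pow(G, private, P)
    return private, public


def derive_session_key(own_private, peer_public):
    """Combine our private value with the peer's public value, then hash."""
    shared = pow(peer_public, own_private, P)
    return hashlib.sha256(shared.to_bytes(16, "big")).digest()


ap_priv, ap_pub = make_keypair()
client1_priv, client1_pub = make_keypair()
client2_priv, client2_pub = make_keypair()

# Each side computes the same key without ever sending it over the air.
key_client1 = derive_session_key(client1_priv, ap_pub)
key_ap_for_client1 = derive_session_key(ap_priv, client1_pub)
key_client2 = derive_session_key(client2_priv, ap_pub)

print(key_client1 == key_ap_for_client1)  # both ends agree
print(key_client1 != key_client2)         # other clients get different keys
```

The sketch also shows why OWE does not stop an impostor AP: nothing in the exchange proves who owns the public value the client receives.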

The HPE Aruba Networking AOS-8 8.11 User Guide states:

"Opportunistic Wireless Encryption (OWE) is a WPA3 feature for open networks that allows anyone to connect without a password, but provides better protection against eavesdropping than a traditional open network. OWE uses a Diffie-Hellman key exchange to negotiate unique encryption keys for each client, ensuring that traffic is encrypted with AES-GCMP and protected from unauthorized interception." (Page 290, OWE Overview Section)

Additionally, the HPE Aruba Networking Wireless Security Guide notes:

"OWE enhances security for open WLANs by providing encryption without requiring authentication. It allows any device to connect, but OWE-capable devices benefit from encrypted traffic, offering better protection against eavesdropping compared to a traditional open network where all traffic is sent in plaintext." (Page 35, OWE Benefits Section)

What is one difference between EAP-Transport Layer Security (EAP-TLS) and Protected EAP (PEAP)?

EAP-TLS creates a TLS tunnel for transmitting user credentials, while PEAP authenticates the server and supplicant during a TLS handshake.

EAP-TLS requires the supplicant to authenticate with a certificate, but PEAP allows the supplicant to use a username and password.

EAP-TLS begins with the establishment of a TLS tunnel, but PEAP does not use a TLS tunnel as part of its process.

EAP-TLS creates a TLS tunnel for transmitting user credentials securely while PEAP protects user credentials with TKIP encryption.

EAP-TLS and PEAP both provide secure authentication methods, but they differ in their requirements for client-side authentication. EAP-TLS requires both the client (supplicant) and the server to authenticate each other with certificates, thereby ensuring a very high level of security. On the other hand, PEAP requires a server-side certificate to create a secure tunnel and allows the client to authenticate using less stringent methods, such as a username and password, which are then protected by the tunnel. This makes PEAP more flexible in environments where client-side certificates are not feasible.

A user is having trouble connecting to an AP managed by a standalone Mobility Controller (MC). What can you do to get detailed logs and debugs for that user's client?

In the MC CLI, set up a control plane packet capture and filter for the client's IP address.

In the MC CLI, set up a data plane packet capture and filter for the client's MAC address.

In the MC UI’s Traffic Analytics dashboard, look for the client's IP address.

In the MC UI’s Diagnostics > Logs pages, add a "user-debug" log setting for the client's MAC address.

When troubleshooting connectivity issues for a user connecting to an AP managed by a standalone Mobility Controller (MC) in an AOS-8 architecture, detailed logs and debugs specific to the user’s client are essential. The MC provides several tools for capturing logs and debugging information, including packet captures and user-specific debug logs.

Option D, "In the MC UI’s Diagnostics > Logs pages, add a ‘user-debug’ log setting for the client's MAC address," is correct. The "user-debug" feature in the MC allows administrators to enable detailed debugging for a specific client by specifying the client’s MAC address. This generates logs related to the client’s authentication, association, role assignment, and other activities, which are critical for troubleshooting connectivity issues. The Diagnostics > Logs pages in the MC UI provide a user-friendly way to configure this setting and view the resulting logs.

Option A, "In the MC CLI, set up a control plane packet capture and filter for the client's IP address," is incorrect because control plane packet captures are used to capture management traffic (e.g., between the MC and APs or other controllers), not user traffic. Additionally, the client may not yet have an IP address if connectivity is failing, making an IP-based filter less effective.

Option B, "In the MC CLI, set up a data plane packet capture and filter for the client's MAC address," is a valid troubleshooting method but is not the best choice for getting detailed logs. Data plane packet captures are useful for analyzing user traffic (e.g., to see if packets are being dropped), but they do not provide the same level of detailed logging as the "user-debug" feature, which includes authentication and association events.

Option C, "In the MC UI’s Traffic Analytics dashboard, look for the client's IP address," is incorrect because the Traffic Analytics dashboard is used for monitoring application usage and traffic patterns, not for detailed troubleshooting of a specific client’s connectivity issues. Additionally, if the client cannot connect, it may not have an IP address or generate traffic visible in the dashboard.

The HPE Aruba Networking AOS-8 8.11 User Guide states:

"To troubleshoot issues for a specific wireless client, you can enable user-specific debugging using the ‘user-debug’ feature. In the Mobility Controller UI, navigate to Diagnostics > Logs, and add a ‘user-debug’ log setting for the client’s MAC address. This will generate detailed logs for the client, including authentication, association, and role assignment events, which can be viewed in the Logs page. For example, to enable user-debug for a client with MAC address 00:11:22:33:44:55, add the setting ‘user-debug 00:11:22:33:44:55’." (Page 512, Troubleshooting Wireless Clients Section)

Additionally, the guide notes:

"While packet captures (control plane or data plane) can be useful for analyzing traffic, the ‘user-debug’ feature provides more detailed logs for troubleshooting client-specific issues, such as failed authentication or association problems." (Page 513, Debugging Tools Section)

A company has an AOS controller-based solution with a WPA3-Enterprise WLAN, which authenticates wireless clients to HPE Aruba Networking ClearPass Policy Manager (CPPM). The company has decided to use digital certificates for authentication. A user's Windows domain computer has had certificates installed on it. However, the Networks and Connections window shows that authentication has failed for the user. The Mobility Controller’s (MC's) RADIUS events show that it is receiving Access-Rejects for the authentication attempt.

What is one place that you can look for deeper insight into why this authentication attempt is failing?

The reports generated by HPE Aruba Networking ClearPass Insight

The RADIUS events within the CPPM Event Viewer

The Alerts tab in the authentication record in CPPM Access Tracker

The packets captured on the MC control plane destined to UDP 1812

The scenario involves an AOS-8 controller-based solution with a WPA3-Enterprise WLAN using HPE Aruba Networking ClearPass Policy Manager (CPPM) for authentication. The company is using digital certificates for authentication (likely EAP-TLS, as it’s the most common certificate-based method for WPA3-Enterprise). A user’s Windows domain computer has certificates installed, but authentication fails. The Mobility Controller (MC) logs show Access-Rejects from CPPM, indicating that CPPM rejected the authentication attempt.

Access-Reject: An Access-Reject message from CPPM means that the authentication failed due to a policy violation, certificate issue, or other configuration mismatch. To troubleshoot, we need to find detailed information about why CPPM rejected the request.

Option C, "The Alerts tab in the authentication record in CPPM Access Tracker," is correct. Access Tracker in CPPM logs all authentication attempts, including successful and failed ones. For a failed attempt (Access-Reject), the authentication record in Access Tracker will include an Alerts tab that provides detailed reasons for the failure. For example, if the client’s certificate is invalid (e.g., expired, not trusted, or missing a required attribute), or if the user does not match a policy in CPPM, the Alerts tab will specify the exact issue (e.g., "Certificate not trusted," "User not found in directory").

Option A, "The reports generated by HPE Aruba Networking ClearPass Insight," is incorrect. ClearPass Insight is used for generating reports and analytics (e.g., trends, usage patterns), not for real-time troubleshooting of specific authentication failures.

Option B, "The RADIUS events within the CPPM Event Viewer," is incorrect. The Event Viewer logs system-level events (e.g., service crashes, NAD mismatches), not detailed authentication failure reasons. While it might log that an Access-Reject was sent, it won’t provide the specific reason for the rejection.

Option D, "The packets captured on the MC control plane destined to UDP 1812," is incorrect. Capturing packets on the MC control plane for UDP 1812 (RADIUS authentication port) can show the RADIUS exchange, but it won’t provide the detailed reason for the Access-Reject. The MC logs already show the Access-Reject, so the issue lies on the CPPM side, and Access Tracker provides more insight.

The HPE Aruba Networking ClearPass Policy Manager 6.11 User Guide states:

"Access Tracker (Monitoring > Live Monitoring > Access Tracker) logs all authentication attempts, including failed ones. For an Access-Reject, the authentication record in Access Tracker includes an Alerts tab that provides detailed reasons for the failure. For example, in a certificate-based authentication (e.g., EAP-TLS), the Alerts tab might show ‘Certificate not trusted’ if the client’s certificate is not trusted by ClearPass, or ‘User not found’ if the user does not match a policy. This is the primary place to look for deeper insight into authentication failures." (Page 299, Access Tracker Troubleshooting Section)

Additionally, the HPE Aruba Networking AOS-8 8.11 User Guide notes:

"If the Mobility Controller logs show an Access-Reject from the RADIUS server (e.g., ClearPass), check the RADIUS server’s authentication logs for details. In ClearPass, the Access Tracker provides detailed failure reasons in the Alerts tab of the authentication record, such as certificate issues or policy mismatches." (Page 500, Troubleshooting 802.1X Authentication Section)

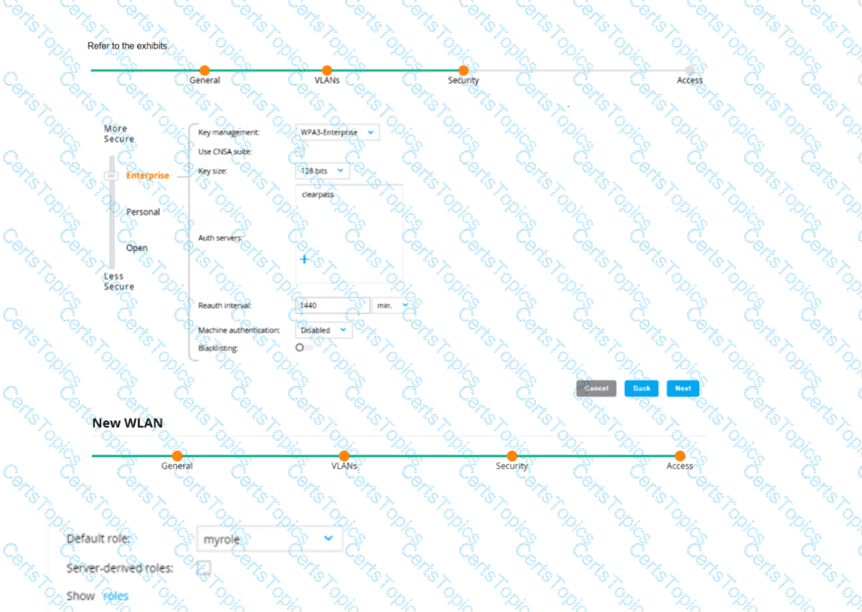

Refer to the exhibit.

How can you use the thumbprint?

Install this thumbprint on management stations to use as two-factor authentication along with manager usernames and passwords. This will ensure managers connect from valid stations.

Copy the thumbprint to other Aruba switches to establish a consistent SSH key for all switches. This will enable managers to connect to the switches securely with less effort.

When you first connect to the switch with SSH from a management station, make sure that the thumbprint matches to ensure that a man-in-the-middle (MITM) attack is not occurring.

Install this thumbprint on management stations. The stations can then authenticate with the thumbprint instead of admins having to enter usernames and passwords.

The thumbprint (also known as a fingerprint) of a certificate or SSH key is a hash that uniquely represents the public key contained within. When you first connect to the switch with SSH from a management station, you should ensure that the thumbprint matches what you expect. This is a security measure to confirm the identity of the device you are connecting to and to ensure that a man-in-the-middle (MITM) attack is not occurring. If the thumbprint matches the known good thumbprint of the switch, it is safe to proceed with the connection.
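The check described above amounts to hashing the public key that the switch presents and comparing the result with a value obtained out of band. The Python sketch below assumes an OpenSSH-style SHA-256 fingerprint (Base64 of the digest with padding removed); the key bytes and function names are hypothetical, and the hash format shown by a given switch may differ.

```python
import base64
import hashlib
import hmac


def sha256_fingerprint(public_key_blob: bytes) -> str:
    """Return an OpenSSH-style fingerprint string for raw public key bytes."""
    digest = hashlib.sha256(public_key_blob).digest()
    return "SHA256:" + base64.b64encode(digest).decode("ascii").rstrip("=")


def fingerprint_matches(presented_blob: bytes, trusted_fingerprint: str) -> bool:
    """Compare the presented key's fingerprint with the known-good value."""
    return hmac.compare_digest(sha256_fingerprint(presented_blob), trusted_fingerprint)


# Hypothetical key material standing in for real host keys.
switch_key = b"hypothetical-switch-host-key"
attacker_key = b"hypothetical-attacker-host-key"

# The trusted value would normally be read from the switch console.
trusted = sha256_fingerprint(switch_key)

print(fingerprint_matches(switch_key, trusted))    # genuine switch
print(fingerprint_matches(attacker_key, trusted))  # MITM presenting its own key
```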

You have an Aruba Mobility Controller (MC) that is locked in a closet. What is another step that Aruba recommends to protect the MC from unauthorized access?

Use local authentication rather than external authentication to authenticate admins.

Change the password recovery password.

Set the local admin password to a long random value that is unknown or locked up securely.

Disable local authentication of administrators entirely.

Protecting an Aruba Mobility Controller from unauthorized access involves several layers of security. One recommendation is to change the password recovery password, which is a special type of password used to recover access to the device in the event the admin password is lost. Changing this to something complex and unique adds an additional layer of security in the event the physical security of the device is compromised.

You are troubleshooting an authentication issue for HPE Aruba Networking switches that enforce 802.1X to a cluster of HPE Aruba Networking ClearPass Policy Manager (CPPMs). You know that CPPM is receiving and processing the authentication requests because the Aruba switches are showing Access-Rejects in their statistics. However, you cannot find the record for the Access-Rejects in CPPM Access Tracker.

What is something you can do to look for the records?

Go to the CPPM Event Viewer, because this is where RADIUS Access Rejects are stored.

Verify that you are logged in to the CPPM UI with read-write, not read-only, access.

Make sure that CPPM cluster settings are configured to show Access-Rejects.

Click Edit in Access Viewer and make sure that the correct servers are selected.

The scenario involves troubleshooting an 802.1X authentication issue on HPE Aruba Networking switches (likely AOS-CX switches) that use a cluster of HPE Aruba Networking ClearPass Policy Manager (CPPM) servers as the RADIUS server. The switches show Access-Rejects in their statistics, indicating that CPPM is receiving and processing the authentication requests but rejecting them. However, the records for these Access-Rejects are not visible in CPPM Access Tracker.

Access Tracker: Access Tracker (Monitoring > Live Monitoring > Access Tracker) in CPPM logs all authentication attempts, including successful (Access-Accept) and failed (Access-Reject) requests. If an Access-Reject is not visible in Access Tracker, it suggests that the request was processed at a lower level and not logged in Access Tracker, or there is a visibility issue (e.g., filtering, clustering).

Option A, "Go to the CPPM Event Viewer, because this is where RADIUS Access Rejects are stored," is correct. The Event Viewer (Monitoring > Event Viewer) in CPPM logs system-level events, including RADIUS-related events that might not appear in Access Tracker. For example, if the Access-Reject is due to a configuration issue (e.g., the switch’s IP address is not recognized as a Network Access Device, NAD, or the shared secret is incorrect), the request may be rejected before it is logged in Access Tracker, and the Event Viewer will capture this event (e.g., "RADIUS authentication attempt from unknown NAD"). Since the switches confirm that CPPM is sending Access-Rejects, the Event Viewer is a good place to look for more details.

Option B, "Verify that you are logged in to the CPPM UI with read-write, not read-only, access," is incorrect. Access Tracker visibility is not dependent on read-write vs. read-only access. Both types of accounts can view Access Tracker records, though read-only accounts cannot modify configurations. The issue is that the records are not appearing, not that the user lacks permission to see them.

Option C, "Make sure that CPPM cluster settings are configured to show Access-Rejects," is incorrect. In a CPPM cluster, Access Tracker records are synchronized across nodes, and there is no specific cluster setting to "show Access-Rejects." Access Tracker logs all authentication attempts by default, unless filtered out (e.g., by time range or search criteria), but the issue here is that the records are not appearing at all.

Option D, "Click Edit in Access Viewer and make sure that the correct servers are selected," is incorrect. Access Tracker (not "Access Viewer") does not have an "Edit" option to select servers. In a CPPM cluster, Access Tracker shows records from all nodes by default, and the user can filter by time, NAD, or other criteria, but the absence of records suggests a deeper issue (e.g., the request was rejected before logging in Access Tracker).

The HPE Aruba Networking ClearPass Policy Manager 6.11 User Guide states:

"If an Access-Reject is not visible in Access Tracker, it may indicate that the RADIUS request was rejected at a low level before being logged. The Event Viewer (Monitoring > Event Viewer) logs system-level events, including RADIUS Access-Rejects that do not appear in Access Tracker. For example, if the request is rejected due to an unknown NAD or shared secret mismatch, the Event Viewer will log an event like ‘RADIUS authentication attempt from unknown NAD,’ providing insight into the rejection." (Page 301, Troubleshooting RADIUS Issues Section)

Additionally, the HPE Aruba Networking AOS-CX 10.12 Security Guide notes:

"When troubleshooting 802.1X authentication issues, if the switch logs show Access-Rejects from the RADIUS server (e.g., ClearPass) but the records are not visible in Access Tracker, check the RADIUS server’s system logs. In ClearPass, the Event Viewer logs RADIUS Access-Rejects that may not appear in Access Tracker, such as those caused by NAD configuration issues." (Page 150, Troubleshooting 802.1X Authentication Section)

What is a guideline for creating certificate signing requests (CSRs) and deploying server Certificates on ArubaOS Mobility Controllers (MCs)?

Create the CSR online using the MC Web UI if your company requires you to archive the private key.

If you create the CSR and public/private keypair offline, create a matching private key online on the MC.

Create the CSR and public/private keypair offline if you want to install the same certificate on multiple MCs.

Generate the private key online, but the public key and CSR offline, to install the same certificate on multiple MCs.

Creating the Certificate Signing Request (CSR) and the public/private keypair offline is recommended when deploying server certificates on multiple ArubaOS Mobility Controllers (MCs). This method enhances security by minimizing the exposure of private keys. By creating and handling these components offline, administrators can maintain better control over the keys and ensure their security before deploying them across multiple devices. This approach also simplifies the management of certificates on multiple controllers, as the same certificate can be installed more securely and efficiently.

How can ARP be used to launch attacks?

Hackers can use ARP to change their NIC's MAC address so they can impersonate legitimate users.

Hackers can exploit the fact that the port used for ARP must remain open and thereby gain remote access to another user's device.

A hacker can use ARP to claim ownership of a CA-signed certificate that actually belongs to another device.

A hacker can send gratuitous ARP messages with the default gateway IP to cause devices to redirect traffic to the hacker's MAC address.

ARP (Address Resolution Protocol) can indeed be exploited to conduct various types of attacks, most notably ARP spoofing/poisoning. Gratuitous ARP is a special kind of ARP message which is used by an IP node to announce or update its IP to MAC mapping to the entire network. A hacker can abuse this by sending out gratuitous ARP messages pretending to associate the IP address of the router (default gateway) with their own MAC address. This results in traffic that was supposed to go to the router being sent to the attacker instead, thus potentially enabling the attacker to intercept, modify, or block traffic.
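From the defender's side, the attack described above shows up as an ARP message that claims the gateway's IP address with an unexpected MAC address. The Python sketch below illustrates that check on a list of already-parsed announcements; the addresses and the function name are hypothetical, and no packet capture library is involved.

```python
def find_gateway_spoofing(arp_announcements, gateway_ip, gateway_mac):
    """Return MACs that claimed the gateway IP but are not the known gateway MAC."""
    known = gateway_mac.lower()
    suspects = []
    for sender_ip, sender_mac in arp_announcements:
        mac = sender_mac.lower()
        if sender_ip == gateway_ip and mac != known and mac not in suspects:
            suspects.append(mac)
    return suspects


# Hypothetical (sender IP, sender MAC) pairs taken from observed ARP messages.
observed = [
    ("10.1.1.1", "00:00:5e:00:01:01"),   # the real default gateway
    ("10.1.1.50", "aa:bb:cc:00:00:50"),  # an ordinary host
    ("10.1.1.1", "de:ad:be:ef:00:99"),   # someone else claiming the gateway IP
    ("10.1.1.1", "DE:AD:BE:EF:00:99"),   # same claim repeated
]

suspects = find_gateway_spoofing(observed, "10.1.1.1", "00:00:5e:00:01:01")
print(suspects)
```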

What is a benefit of Protected Management Frames (PMF), sometimes called Management Frame Protection (MFP)?

PMF helps to protect APs and MCs from unauthorized management access by hackers.

PMF ensures that traffic between APs and Mobility Controllers (MCs) is encrypted.

PMF prevents hackers from capturing the traffic between APs and Mobility Controllers.

PMF protects clients from DoS attacks based on forged de-authentication frames.

Protected Management Frames (PMF), also known as Management Frame Protection (MFP), is designed to protect clients from denial-of-service (DoS) attacks that involve forged de-authentication and disassociation frames. These attacks can disconnect legitimate clients from the network. PMF provides a way to authenticate these management frames, ensuring that they are not forged, thus enhancing the security of the wireless network.

What is one way that WPA3-Personal enhances security when compared to WPA2-Personal?

WPA3-Personal is more secure against password leaking because all users have their own username and password.

WPA3-Personal prevents eavesdropping on other users' wireless traffic by a user who knows the passphrase for the WLAN.

WPA3-Personal is more resistant to passphrase cracking because it requires passphrases to be at least 12 characters.

WPA3-Personal is more complicated to deploy because it requires a backend authentication server.

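WPA3-Personal enhances security over WPA2-Personal by replacing the pre-shared key (PSK) handshake with Simultaneous Authentication of Equals (SAE). With SAE, each client session derives its own unique pairwise key that cannot be computed from the passphrase alone, even though all users share the same network passphrase. This prevents a user who knows the passphrase from decrypting and eavesdropping on the traffic of other users on the same network, thus enhancing privacy and security. (Wi-Fi Enhanced Open is a separate feature that applies similar per-session encryption to open networks without a passphrase.)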

You have a network with ArubaOS-Switches for which Aruba ClearPass Policy Manager (CPPM) is acting as a TACACS+ server to authenticate managers. CPPM assigns the admins a TACACS+ privilege level, either manager or operator. You are now adding ArubaOS-CX switches to the network. ClearPass admins want to use the same CPPM service and policies to authenticate managers on the new switches.

What should you explain?

This approach cannot work because the ArubaOS-CX switches do not accept standard TACACS+ privilege levels.

This approach cannot work because the ArubaOS-CX switches do not support TACACS+.

This approach will work, but will need to be adjusted later if you want to assign managers to the default auditors group.

This approach will work to assign admins to the default "administrators" group, but not to the default "operators" group.

With ArubaOS-CX switches, the use of ClearPass Policy Manager (CPPM) as a TACACS+ server for authentication is supported. The privilege levels assigned by CPPM will translate onto the switches, where the "manager" privilege level typically maps to administrative capabilities and the "operator" privilege level maps to more limited capabilities. ArubaOS-CX does support standard TACACS+ privilege levels, so administrators can be assigned appropriately. If the ClearPass policies are correctly configured, they will work for both ArubaOS-Switches and ArubaOS-CX switches. The distinction between the "administrators" and "operators" groups is inherent in the ArubaOS-CX role-based access control, and these default groups need to be appropriately mapped to the TACACS+ privilege levels assigned by CPPM.

What is one way a honeypot can be used to launch a man-in-the-middle (MITM) attack to wireless clients?

It uses a combination of software and hardware to jam the RF band and prevent the client from connecting to any wireless networks.

It runs an Nmap scan on the wireless client to find the client's MAC and IP address. The hacker then connects to another network and spoofs those addresses.

It examines wireless clients' probes and broadcasts the SSIDs in the probes, so that wireless clients will connect to it automatically.

It uses ARP poisoning to disconnect wireless clients from the legitimate wireless network and force clients to connect to the hacker's wireless network instead.

A honeypot can be used to launch a Man-in-the-Middle (MITM) attack on wireless clients by examining wireless clients' probe requests and then broadcasting the SSIDs in those probes. Clients with those SSIDs in their preferred network list may then automatically connect to the honeypot, believing it to be a legitimate network. Once the client is connected to the attacker's honeypot, the attacker can intercept, monitor, or manipulate the client's traffic, effectively executing a MITM attack.

Refer to the exhibit.

This Aruba Mobility Controller (MC) should authenticate managers who access the Web UI to ClearPass Policy Manager (CPPM). ClearPass admins have asked you to use RADIUS and explained that the MC should accept managers' roles in Aruba-Admin-Role VSAs.

Which setting should you change to follow Aruba best security practices?

Change the local user role to read-only

Clear the MSCHAP check box

Disable local authentication

Change the default role to "guest-provisioning"

To follow Aruba best security practices, you should disable local authentication. When integrating with an external RADIUS server like ClearPass Policy Manager (CPPM) for authenticating administrative access to the Mobility Controller (MC), it is a best practice to rely on the external server rather than the local user database. This practice not only centralizes the management of user roles and access but also enhances security by leveraging CPPM's advanced authentication mechanisms.

You need to implement a WPA3-Enterprise network that can also support WPA2-Enterprise clients. What is a valid configuration for the WPA3-Enterprise WLAN?

CNSA mode disabled with 256-bit keys

CNSA mode disabled with 128-bit keys

CNSA mode enabled with 256-bit keys

CNSA mode enabled with 128-bit keys

In an Aruba network, when setting up a WPA3-Enterprise network that also supports WPA2-Enterprise clients, you would typically configure the network to operate in a transitional mode that supports both protocols. CNSA (Commercial National Security Algorithm) mode is intended for networks that require higher security standards as specified by the US National Security Agency (NSA). However, for compatibility with WPA2 clients, which do not support CNSA requirements, you would disable CNSA mode. WPA3 can use 256-bit encryption keys, which offer a higher level of security than the 128-bit keys used in WPA2.

An admin has created a WLAN that uses the settings shown in the exhibits (and has not otherwise adjusted the settings in the AAA profile). A client connects to the WLAN. Under which circumstances will a client receive the default role assignment?

The client has attempted 802.1X authentication, but the MC could not contact the authentication server.

The client has attempted 802.1X authentication, but failed to maintain a reliable connection, leading to a timeout error.

The client has passed 802.1X authentication, and the value in the Aruba-User-Role VSA matches a role on the MC.

The client has passed 802.1X authentication and the authentication server did not send an Aruba-User-Role VSA.

In the context of an Aruba Mobility Controller (MC) configuration, a client will receive the default role assignment if they have passed 802.1X authentication and the authentication server did not send an Aruba-User-Role Vendor Specific Attribute (VSA). The default role is assigned by the MC when a client successfully authenticates but the authentication server provides no specific role instruction. This behavior ensures that a client is not left without any role assignment, which could potentially lead to a lack of network access or access control. This default role assignment mechanism is part of Aruba's role-based access control, as documented in the ArubaOS user guide and best practices.

Which endpoint classification capabilities do Aruba network infrastructure devices have on their own without ClearPass solutions?

ArubaOS-CX switches can use a combination of active and passive methods to assign roles to clients.

ArubaOS devices (controllers and IAPs) can use DHCP fingerprints to assign roles to clients.

ArubaOS devices can use a combination of DHCP fingerprints, HTTP User-Agent strings, and Nmap to construct endpoint profiles.

ArubaOS-Switches can use DHCP fingerprints to construct detailed endpoint profiles.

Without the integration of Aruba ClearPass or other advanced network access control solutions, ArubaOS devices (controllers and Instant APs) are able to use DHCP fingerprinting to assign roles to clients. This method allows the devices to identify the type of client devices connecting to the network based on the DHCP requests they send. While this is a more basic form of endpoint classification compared to the capabilities provided by ClearPass, it still enables some level of access control based on device type. This functionality and its limitations are described in Aruba's product documentation for ArubaOS devices, highlighting the benefits of integrating a full-featured solution like ClearPass for more granular and powerful endpoint classification capabilities.
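As a rough illustration of how a DHCP fingerprint can drive role assignment, the Python sketch below hex-encodes a DHCP option number together with its value bytes and looks the result up in a rule table. Everything here is an assumption for illustration: the helper names, the rule table, the role names, and the option 55 parameter lists are placeholders and are not taken from any vendor fingerprint database or from ArubaOS itself.

```python
from typing import Dict, List


def option_signature(option: int, values: List[int]) -> str:
    """Hex-encode a DHCP option number followed by its value bytes."""
    return bytes([option, *values]).hex().upper()


# Hypothetical rule table: signature -> role name (illustrative values only).
RULES: Dict[str, str] = {
    option_signature(55, [1, 3, 6, 15, 119, 252]): "mobile-device",
    option_signature(55, [1, 3, 6, 15, 31, 33, 43, 44, 46, 47, 121, 249, 252]): "corporate-laptop",
}


def role_for(option: int, values: List[int], default_role: str = "guest") -> str:
    """Return the role mapped to this fingerprint, or the default role."""
    return RULES.get(option_signature(option, values), default_role)


print(option_signature(55, [1, 3, 6, 15, 119, 252]))
print(role_for(55, [1, 3, 6, 15, 119, 252]))
print(role_for(55, [1, 3, 6]))
```

An exact-match lookup like this also shows the limitation noted above: a single DHCP option gives only a coarse hint of the device type, and unknown fingerprints fall through to the default role.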

You have enabled 802.1X authentication on an AOS-CX switch, including on port 1/1/1. That port has these port-access roles configured on it:

Fallback role = roleA

Auth role = roleB

Critical role = roleC

No other port-access roles are configured on the port. A client connects to that port. The user succeeds authentication, and CPPM does not send an Aruba-User-Role VSA.

What role does the client receive?

The client receives roleC.

The client is denied access.

The client receives roleB.

The client receives roleA.

In an AOS-CX switch environment, 802.1X authentication is used to authenticate clients connecting to ports, and roles are assigned based on the authentication outcome and configuration. The roles mentioned in the question—fallback, auth, and critical—have specific purposes in the AOS-CX port-access configuration:

Auth role (roleB): This role is applied when a client successfully authenticates via 802.1X and no specific role is assigned by the RADIUS server (e.g., via an Aruba-User-Role VSA). It is the default role for successful authentication.

Fallback role (roleA): This role is applied when no authentication method is attempted (e.g., the client does not support 802.1X or MAC authentication and no other method is configured).

Critical role (roleC): This role is applied when the switch cannot contact the RADIUS server (e.g., during a server timeout or failure), allowing the client to have limited access in a "critical" state.

In this scenario, the client successfully authenticates via 802.1X, and CPPM does not send an Aruba-User-Role VSA. Since authentication is successful, the switch applies the auth role (roleB) as the default role for successful authentication when no specific role is provided by the RADIUS server.

Option A, "The client receives roleC," is incorrect because the critical role is only applied when the RADIUS server is unreachable, which is not the case here since authentication succeeded.

Option B, "The client is denied access," is incorrect because the client successfully authenticated, so access is granted with the appropriate role.

Option D, "The client receives roleA," is incorrect because the fallback role is applied only when no authentication is attempted, not when authentication succeeds.
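The role selection logic described in this explanation can be summarized as a small decision function. This is a simplified Python model of the behavior as explained here, not switch code; the function name select_role and its parameters are made up for illustration, and other roles that a real port could have (such as a reject role) are left out because none is configured in the scenario.

```python
from typing import Optional


def select_role(
    auth_attempted: bool,
    server_reachable: bool,
    auth_succeeded: bool,
    vsa_role: Optional[str],
    *,
    auth_role: str,
    fallback_role: str,
    critical_role: str,
) -> Optional[str]:
    """Return the role the client receives, or None if access is denied."""
    if not auth_attempted:
        return fallback_role      # no authentication method attempted
    if not server_reachable:
        return critical_role      # RADIUS server unavailable
    if not auth_succeeded:
        return None               # rejected, and no reject role in this model
    # Successful authentication: a role from the server wins, else the auth role.
    return vsa_role or auth_role


port_roles = dict(auth_role="roleB", fallback_role="roleA", critical_role="roleC")

# Scenario from the question: authentication succeeds, no Aruba-User-Role VSA.
result = select_role(True, True, True, None, **port_roles)
print(result)
```

Changing the inputs shows the other branches: an unreachable server yields roleC, and a client that never attempts authentication yields roleA.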

The HPE Aruba Networking AOS-CX 10.12 Security Guide states:

"When a client successfully authenticates using 802.1X, the switch assigns the client to the auth role configured for the port, unless the RADIUS server specifies a different role via the Aruba-User-Role VSA. If no Aruba-User-Role VSA is present in the Access-Accept message, the auth role is applied." (Page 132, 802.1X Authentication Section)

Additionally, the guide clarifies the roles:

"Auth role: Applied after successful 802.1X or MAC authentication if no role is specified by the RADIUS server."

"Fallback role: Applied when no authentication method is attempted."

"Critical role: Applied when the RADIUS server is unavailable." (Page 134, Port-Access Roles Section)

The monitoring admin has asked you to set up an AOS-CX switch to meet these criteria:

Send logs to a SIEM Syslog server at 10.4.13.15 at the standard TCP port (514)

Send a log for all events at the "warning" level or above; do not send logs with a lower level than "warning".

The switch did not have any "logging" configuration on it. You then entered this command:

AOS-CX(config)# logging 10.4.13.15 tcp vrf default

What should you do to finish configuring to the requirements?

Specify the "warning" severity level for the logging server.

Add logging categories at the global level.

Ask for the Syslog password and configure it on the switch.

Configure logging as a debug destination.

The task is to configure an AOS-CX switch to send logs to a SIEM Syslog server at IP address 10.4.13.15 using TCP port 514, with logs for events at the "warning" severity level or above (i.e., warning, error, critical, alert, emergency). The initial command entered is:

AOS-CX(config)# logging 10.4.13.15 tcp vrf default

This command configures the switch to send logs to the Syslog server at 10.4.13.15 using TCP (port 514 is the default for TCP Syslog unless specified otherwise) and the default VRF. However, this command alone does not specify the severity level of the logs to be sent, which is a requirement of the task.

Severity Level Configuration: AOS-CX switches allow you to specify the severity level for logs sent to a Syslog server. The severity levels, in increasing order of severity, are: debug, informational, notice, warning, error, critical, alert, and emergency. The requirement is to send logs at the "warning" level or above, meaning warning, error, critical, alert, and emergency logs should be sent, but debug, informational, and notice logs should not.
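The threshold behavior can be made concrete with a few lines of Python. This is only a sketch of the comparison, using the severity names as written in this explanation (the keywords accepted by the switch CLI may be abbreviated differently); the names SEVERITIES and should_send are assumptions for illustration.

```python
# Severity names from the explanation above, lowest to highest.
SEVERITIES = [
    "debug", "informational", "notice", "warning",
    "error", "critical", "alert", "emergency",
]


def should_send(event_level: str, threshold: str = "warning") -> bool:
    """True if the event is at or above the configured severity threshold."""
    return SEVERITIES.index(event_level) >= SEVERITIES.index(threshold)


sent = [level for level in SEVERITIES if should_send(level)]
print(sent)
```

With the threshold set to "warning", the three lower levels are filtered out and the five levels from warning through emergency are forwarded.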

Option A, "Specify the ‘warning’ severity level for the logging server," is correct. To meet the requirement, you need to add the severity level to the logging configuration for the specific Syslog server. The command to do this is:

AOS-CX(config)# logging 10.4.13.15 severity warning