Which partition assignment strategy minimizes partition movements between two assignments?

Which statement is true about how exactly-once semantics (EOS) work in Kafka Streams?

Clients that connect to a Kafka cluster are required to specify one or more brokers in the bootstrap.servers parameter.

What is the primary advantage of specifying more than one broker?

Which two statements about Kafka Connect Single Message Transforms (SMTs) are correct?

(Select two.)

You need to explain the best reason to implement the consumer callback interface ConsumerRebalanceListener before a consumer group rebalance.

Which statement is correct?

You need to set alerts on key broker metrics to trigger notifications when the cluster is unhealthy.

Which three broker metrics should you monitor at a minimum?

(Select three.)

A producer is configured with the default partitioner. It is sending records to a topic that is configured with five partitions. The records do not contain a key.

What is the result of this?

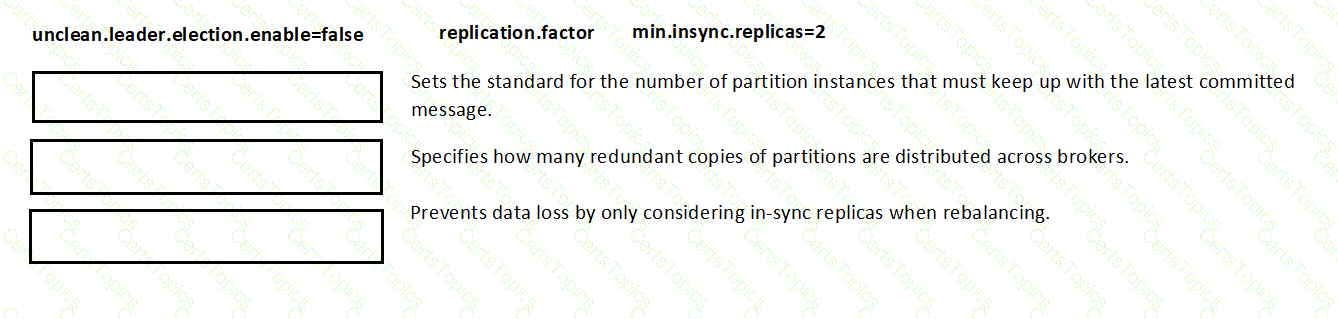

Match the topic configuration setting with the reason the setting affects topic durability.

(You are given settings like unclean.leader.election.enable=false, replication.factor, min.insync.replicas=2)
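As a rough aid for reasoning about how these settings interact, the sketch below computes how many replicas of a partition can be lost while a producer using acks=all can still write. The function name is hypothetical, and the value replication.factor=3 is an assumption for illustration only; the question does not state it.

```python
def tolerated_replica_failures(replication_factor: int, min_insync_replicas: int) -> int:
    """Number of replicas that can drop out of the ISR before acks=all writes are rejected.

    Hypothetical helper for illustration; it models only the arithmetic,
    not broker behavior such as leader election.
    """
    if not 1 <= min_insync_replicas <= replication_factor:
        raise ValueError("min.insync.replicas must be between 1 and replication.factor")
    # Writes with acks=all succeed while at least min.insync.replicas replicas are in sync.
    return replication_factor - min_insync_replicas


if __name__ == "__main__":
    # Assumed example values: replication.factor=3 (not given in the question), min.insync.replicas=2.
    print(tolerated_replica_failures(3, 2))
```

Setting unclean.leader.election.enable=false is a separate safeguard: it prevents an out-of-sync replica from becoming leader, which the arithmetic above does not capture.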

Which two producer exceptions are examples of the class RetriableException? (Select two.)

Which two statements are correct when assigning partitions to the consumers in a consumer group using the assign() API?

(Select two.)

What is the default maximum size of a message the Apache Kafka broker can accept?

Where are source connector offsets stored?

A stream processing application is consuming from a topic with five partitions. You run three instances of the application. Each instance has num.stream.threads=5.

You need to identify the number of stream tasks that will be created and how many will actively consume messages from the input topic.
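The arithmetic behind this scenario can be sketched as follows. This is a minimal model, assuming a topology with a single sub-topology that reads only the one input topic, so the task count equals the partition count; the function name and the returned keys are hypothetical.

```python
def stream_task_summary(input_partitions: int, instances: int, threads_per_instance: int) -> dict:
    """Estimate task and thread counts for a simple Kafka Streams deployment.

    Assumes one sub-topology with one input topic, so one task per partition.
    """
    tasks = input_partitions
    total_threads = instances * threads_per_instance
    # A task runs on exactly one thread, so at most `tasks` threads can be busy.
    active_threads = min(tasks, total_threads)
    return {
        "tasks": tasks,
        "total_threads": total_threads,
        "active_threads": active_threads,
        "idle_threads": total_threads - active_threads,
    }


if __name__ == "__main__":
    # Values from the scenario: five partitions, three instances, num.stream.threads=5.
    print(stream_task_summary(5, 3, 5))
```

Under these assumptions the scenario yields 15 threads in total but only as many tasks as there are input partitions, so the surplus threads have nothing to process.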

Which statement describes the storage location for a sink connector’s offsets?

This schema excerpt is an example of which schema format?

package com.mycorp.mynamespace;

message SampleRecord {
  int32 Stock = 1;
  double Price = 2;
  string Product_Name = 3;
}

You are writing a producer application and need to ensure proper delivery. You configure the producer with acks=all.

Which two actions should you take to ensure proper error handling?

(Select two.)

What is a consequence of increasing the number of partitions in an existing Kafka topic?