Which type of virtualization provides containerization and uses a microservices architecture?

hardware-assisted virtualization

OS-level virtualization

full virtualization

paravirtualization

Virtualization technologies enable the creation of isolated environments for running applications or services. Let’s analyze each option:

A. hardware-assisted virtualization

Incorrect: Hardware-assisted virtualization (e.g., Intel VT-x, AMD-V) provides support for running full virtual machines (VMs) on physical hardware. It is not related to containerization or microservices architecture.

B. OS-level virtualization

Correct: OS-level virtualization enables containerization, where multiple isolated user-space instances (containers) run on a single operating system kernel. Containers are lightweight and share the host OS kernel, which makes them well suited to microservices architectures. Docker is a common example, and orchestrators such as Kubernetes manage containers at scale.

C. full virtualization

Incorrect: Full virtualization involves running a complete guest operating system on top of a hypervisor (e.g., VMware ESXi, KVM). While it provides strong isolation, it is not as lightweight or efficient as containerization for microservices.

D. paravirtualization

Incorrect: Paravirtualization involves modifying the guest operating system to communicate directly with the hypervisor. Like full virtualization, it is used for running VMs, not containers.

Why OS-Level Virtualization?

Containerization: OS-level virtualization creates isolated environments (containers) that share the host OS kernel but have their own file systems, libraries, and configurations.

Microservices Architecture: Containers are well-suited for deploying microservices because they are lightweight, portable, and scalable.

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes understanding virtualization technologies, including OS-level virtualization. Containerization is a key component of modern cloud-native architectures, enabling efficient deployment of microservices.

For example, Juniper Contrail integrates with Kubernetes to manage containerized workloads in cloud environments. OS-level virtualization is fundamental to this integration.

What are two Kubernetes worker node components? (Choose two.)

kube-apiserver

kubelet

kube-scheduler

kube-proxy

Kubernetes worker nodes are responsible for running containerized applications and managing the workloads assigned to them. Each worker node contains several key components that enable it to function within a Kubernetes cluster. Let’s analyze each option:

A. kube-apiserver

Incorrect: The kube-apiserver is a control plane component, not a worker node component. It serves as the front-end for the Kubernetes API, handling communication between the control plane and worker nodes.

B. kubelet

Correct: The kubelet is a critical worker node component. It ensures that containers are running in the desired state by interacting with the container runtime (e.g., containerd). It communicates with the control plane to receive instructions and report the status of pods.

C. kube-scheduler

Incorrect: The kube-scheduler is a control plane component responsible for assigning pods to worker nodes based on resource availability and other constraints. It does not run on worker nodes.

D. kube-proxy

Correct: The kube-proxy is another essential worker node component. It manages network communication for services and pods by implementing load balancing and routing rules. It ensures that traffic is correctly forwarded to the appropriate pods.

Why These Components?

kubelet: Ensures that containers are running as expected and maintains the desired state of pods.

kube-proxy: Handles networking and enables communication between services and pods within the cluster.
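The kubelet's role of maintaining the desired state of pods is a reconciliation loop. The sketch below is a toy illustration of that idea, not kubelet code. The `reconcile` function and its inputs are hypothetical, and the real kubelet works through the container runtime and also applies restart policies, probes, and backoff, none of which is modeled here.

```python
from typing import Dict, List, Set


def reconcile(desired: Set[str], running: Set[str]) -> Dict[str, List[str]]:
    """One toy reconciliation step (hypothetical helper, not kubelet code).

    desired: container names the pod spec says should be running.
    running: container names the runtime currently reports as running.
    Returns the actions that move actual state toward desired state.
    """
    return {
        # In the spec but not running: start (or restart) them.
        "start": sorted(desired - running),
        # Running but not in the spec: stop them.
        "stop": sorted(running - desired),
    }


if __name__ == "__main__":
    # The sidecar has crashed and a stale container is still running.
    print(reconcile({"nginx", "sidecar"}, {"nginx", "orphan"}))
    # → {'start': ['sidecar'], 'stop': ['orphan']}
```

Running this step repeatedly is what "ensuring the desired state" means in practice: whenever actual and desired state diverge, the next pass produces corrective actions.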

JNCIA Cloud References:

The JNCIA-Cloud certification covers Kubernetes architecture, including the roles of worker node components. Understanding the functions of kubelet and kube-proxy is crucial for managing Kubernetes clusters and troubleshooting issues.

For example, Juniper Contrail integrates with Kubernetes to provide advanced networking and security features. Proficiency with worker node components ensures efficient operation of containerized workloads.

You want to create a template that defines the CPU, RAM, and disk space properties that a VM will use when instantiated.

In this scenario, which OpenStack object should you create?

role

Image

project

flavor

In OpenStack, a flavor defines the compute, memory, and storage properties of a virtual machine (VM) instance. Let’s analyze each option:

A. role

Incorrect: A role defines permissions and access levels for users within a project. It is unrelated to defining VM properties.

B. Image

Incorrect: An image is a template used to create VM instances. While images define the operating system and initial configuration, they do not specify CPU, RAM, or disk space properties.

C. project

Incorrect: A project (or tenant) represents an isolated environment for managing resources. It does not define the properties of individual VMs.

D. flavor

Correct: A flavor specifies the CPU, RAM, and disk space properties that a VM will use when instantiated. For example, a flavor might define a VM with 2 vCPUs, 4 GB of RAM, and 20 GB of disk space.

Why Flavor?

Resource Specification: Flavors allow administrators to define standardized resource templates for VMs, ensuring consistency and simplifying resource allocation.

Flexibility: Users can select the appropriate flavor based on their workload requirements, making it easy to deploy VMs with predefined configurations.
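Flavors act as a menu of resource templates, and a user picks one that covers the workload. The sketch below models that choice in plain Python; the `Flavor` class, the catalog entries, and `smallest_fit` are hypothetical illustrations, not the Nova API. On a real cloud, an administrator would define such a flavor with the OpenStack CLI, for example `openstack flavor create --vcpus 2 --ram 4096 --disk 20 m1.custom` (RAM in MB, disk in GB; the name m1.custom is a placeholder).

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Flavor:
    """Illustrative model of a flavor's core properties (not the Nova API)."""
    name: str
    vcpus: int
    ram_mb: int
    disk_gb: int


# Hypothetical catalog; "medium" matches the 2 vCPU / 4 GB / 20 GB example above.
CATALOG: List[Flavor] = [
    Flavor("small", vcpus=1, ram_mb=2048, disk_gb=10),
    Flavor("medium", vcpus=2, ram_mb=4096, disk_gb=20),
    Flavor("large", vcpus=4, ram_mb=8192, disk_gb=40),
]


def smallest_fit(flavors: List[Flavor], vcpus: int, ram_mb: int, disk_gb: int) -> Optional[Flavor]:
    """Return the smallest flavor that satisfies every requested resource, or None."""
    candidates = [
        f for f in flavors
        if f.vcpus >= vcpus and f.ram_mb >= ram_mb and f.disk_gb >= disk_gb
    ]
    # "Smallest" here means fewest vCPUs, then least RAM, then least disk.
    return min(candidates, key=lambda f: (f.vcpus, f.ram_mb, f.disk_gb), default=None)


if __name__ == "__main__":
    choice = smallest_fit(CATALOG, vcpus=2, ram_mb=3000, disk_gb=15)
    print(choice.name if choice else "no flavor fits")
```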

JNCIA Cloud References:

The JNCIA-Cloud certification covers OpenStack concepts, including flavors, as part of its cloud infrastructure curriculum. Understanding how flavors define VM properties is essential for managing compute resources effectively.

For example, Juniper Contrail integrates with OpenStack Nova to provide advanced networking features for VMs deployed using specific flavors.

What is the name of the Docker container runtime?

docker_cli

containerd

dockerd

cri-o

Docker is a popular containerization platform that relies on a container runtime to manage the lifecycle of containers. The container runtime is responsible for tasks such as creating, starting, stopping, and managing containers. Let’s analyze each option:

A. docker_cli

Incorrect: The Docker CLI (Command Line Interface) is a tool used to interact with the Docker daemon (dockerd). It is not a container runtime but rather a user interface for managing Docker containers.

B. containerd

Correct: containerd is the default container runtime used by Docker. It is a lightweight, industry-standard runtime that handles low-level container management tasks, such as image transfer, container execution, and lifecycle management. Docker delegates these tasks to containerd through the Docker daemon.

C. dockerd

Incorrect: dockerd is the Docker daemon, which manages Docker objects such as images, containers, networks, and volumes. While dockerd interacts with the container runtime, it is not the runtime itself.

D. cri-o

Incorrect: cri-o is an alternative container runtime designed specifically for Kubernetes. It implements the Kubernetes Container Runtime Interface (CRI) and is not used by Docker.

Why containerd?

Industry Standard: containerd is a widely adopted container runtime that adheres to the Open Container Initiative (OCI) standards.

Integration with Docker: Docker uses containerd as its default runtime, making it the correct answer in this context.

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes understanding containerization technologies and their components. Docker and its runtime (containerd) are foundational tools in modern cloud environments, enabling lightweight, portable, and scalable application deployment.

For example, Juniper Contrail integrates with container orchestration platforms like Kubernetes, which often use containerd as the underlying runtime. Understanding container runtimes is essential for managing containerized workloads in cloud environments.

Click the Exhibit button.

You apply the manifest file shown in the exhibit.

Which two statements are correct in this scenario? (Choose two.)

The created pods are receiving traffic on port 80.

This manifest is used to create a deployment.

This manifest is used to create a deploymentConfig.

Four pods are created as a result of applying this manifest.

The provided YAML manifest defines a Kubernetes Deployment object that creates and manages a set of pods running the NGINX web server. Let’s analyze each statement in detail:

A. The created pods are receiving traffic on port 80.

Correct:

The containerPort: 80 field in the manifest specifies that the NGINX container listens on port 80 for incoming traffic.

While this does not expose the pods externally, it ensures that the application inside the pod (NGINX) is configured to receive traffic on port 80.

B. This manifest is used to create a deployment.

Correct:

The kind: Deployment field explicitly indicates that this manifest is used to create a Kubernetes Deployment.

Deployments are used to manage the desired state of pods, including scaling, rolling updates, and self-healing.

C. This manifest is used to create a deploymentConfig.

Incorrect:

deploymentConfig is a concept specific to OpenShift, not standard Kubernetes. In OpenShift, deploymentConfig provides additional features like triggers and lifecycle hooks, but this manifest uses the standard Kubernetes Deployment object.

D. Four pods are created as a result of applying this manifest.

Incorrect:

The replicas: 3 field in the manifest specifies that the Deployment will create three replicas of the NGINX pod. Therefore, only three pods are created, not four.

Why These Statements?

Traffic on Port 80:

The containerPort: 80 field ensures that the NGINX application inside the pod listens on port 80. This is critical for the application to function as a web server.

Deployment Object:

The kind: Deployment field confirms that this manifest creates a Kubernetes Deployment, which manages the lifecycle of the pods.

Replica Count:

The replicas: 3 field explicitly states that three pods will be created. Any assumption of four pods is incorrect.
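Because the exhibit itself is not reproduced here, the sketch below rebuilds an approximate manifest as a Python dictionary using only the fields discussed above (kind: Deployment, replicas: 3, an NGINX container with containerPort: 80); the metadata name and labels are hypothetical placeholders. Reading the fields programmatically shows how each answer follows directly from the manifest.

```python
from typing import Any, Dict, List

# Approximation of the exhibit, rebuilt from the fields discussed above.
# The name and labels are hypothetical placeholders.
MANIFEST: Dict[str, Any] = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "nginx-deployment"},
    "spec": {
        "replicas": 3,
        "selector": {"matchLabels": {"app": "nginx"}},
        "template": {
            "metadata": {"labels": {"app": "nginx"}},
            "spec": {
                "containers": [
                    {"name": "nginx", "image": "nginx", "ports": [{"containerPort": 80}]}
                ]
            },
        },
    },
}


def summarize(manifest: Dict[str, Any]) -> Dict[str, Any]:
    """Extract the fields that answer the exhibit question."""
    spec = manifest["spec"]
    containers = spec["template"]["spec"]["containers"]
    ports: List[int] = [p["containerPort"] for c in containers for p in c.get("ports", [])]
    return {
        "kind": manifest["kind"],
        # Kubernetes defaults replicas to 1 when the field is omitted.
        "pods": spec.get("replicas", 1),
        "container_ports": ports,
    }


if __name__ == "__main__":
    print(summarize(MANIFEST))
    # → {'kind': 'Deployment', 'pods': 3, 'container_ports': [80]}
```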

Additional Context:

Kubernetes Deployments: Deployments are one of the most common Kubernetes objects used to manage stateless applications. They ensure that the desired number of pod replicas is always running and can handle updates or rollbacks seamlessly.

Ports in Kubernetes: The containerPort field in the pod specification defines the port on which the containerized application listens. However, to expose the pods externally, a Kubernetes Service (e.g., NodePort, LoadBalancer) must be created.

JNCIA Cloud References:

The JNCIA-Cloud certification covers Kubernetes concepts, including Deployments, Pods, and networking. Understanding how Deployments work and how ports are configured is essential for managing containerized applications in cloud environments.

For example, Juniper Contrail integrates with Kubernetes to provide advanced networking and security features for Deployments like the one described in the exhibit.

Which command would you use to see which VMs are running on your KVM device?

virt-install

virsh net-list

virsh list

VBoxManage list runningvms

KVM (Kernel-based Virtual Machine) is a popular open-source virtualization technology that allows you to run virtual machines (VMs) on Linux systems. The virsh command-line tool (part of libvirt) is used to manage KVM VMs. Let’s analyze each option:

A. virt-install

Incorrect: The virt-install command is used to create and provision new virtual machines. It is not used to list running VMs.

B. virsh net-list

Incorrect: The virsh net-list command lists virtual networks configured in the KVM environment. It does not display information about running VMs.

C. virsh list

Correct: The virsh list command displays the status of virtual machines managed by the KVM hypervisor. By default, it shows only running VMs. You can use the --all flag to include stopped VMs in the output.

D. VBoxManage list runningvms

Incorrect: The VBoxManage command is used with Oracle VirtualBox, not KVM. It is unrelated to KVM virtualization.

Why virsh list?

Purpose-Built for KVM: virsh is the standard tool for managing KVM virtual machines, and virsh list is specifically designed to show the status of running VMs.

Simplicity: The command is straightforward and provides the required information without additional complexity.
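For scripting, the same check can be wrapped in Python. This sketch assumes virsh is on the PATH and that the default libvirt connection is the one you want (a non-root user may need `virsh -c qemu:///system list` to see system VMs). `running_vms` is a hypothetical helper; it calls `virsh list --name`, which prints only domain names, and accepts an injectable runner so it can be tested without libvirt installed.

```python
import subprocess
from typing import Callable, List


def running_vms(run: Callable[..., subprocess.CompletedProcess] = subprocess.run) -> List[str]:
    """Return the names of running KVM/libvirt domains.

    Without --all, virsh list shows only running domains; --name prints
    one domain name per line. Blank lines in the output are skipped.
    """
    result = run(["virsh", "list", "--name"], capture_output=True, text=True, check=True)
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    for name in running_vms():
        print(name)
```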

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes understanding virtualization technologies, including KVM. Managing virtual machines using tools like virsh is a fundamental skill for operating virtualized environments.

For example, Juniper Contrail supports integration with KVM hypervisors, enabling the deployment and management of virtualized network functions (VNFs). Proficiency with KVM tools ensures efficient management of virtualized infrastructure.

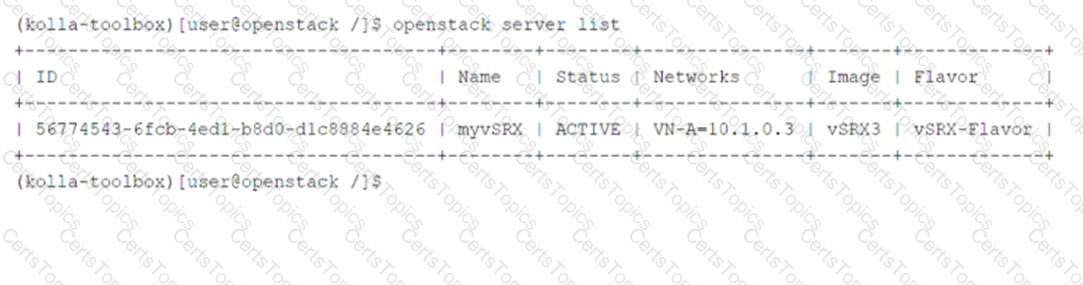

Click the Exhibit button.

Referring to the exhibit, which two statements are correct? (Choose two.)

The myvSRX instance is using a default image.

The myvSRX instance is a part of a default network.

The myvSRX instance is created using a custom flavor.

The myvSRX instance is currently running.

The openstack server list command provides information about virtual machine (VM) instances in the OpenStack environment. Let’s analyze the exhibit and each statement:

Key Information from the Exhibit:

The output shows details about the myvSRX instance:

Status: ACTIVE (indicating the instance is running).

Networks: VN-A-10.1.0.3 (indicating the instance is part of a specific network).

Image: vSRX3 (indicating the instance was created using a custom image).

Flavor: vSRX-Flavor (indicating the instance was created using a custom flavor).

Option Analysis:

A. The myvSRX instance is using a default image.

Incorrect: The image name vSRX3 suggests that this is a custom image, not the default image provided by OpenStack.

B. The myvSRX instance is a part of a default network.

Incorrect: The Networks entry VN-A-10.1.0.3 shows the instance attached to a specific, user-created network (VN-A, with address 10.1.0.3), not a default network.

C. The myvSRX instance is created using a custom flavor.

Correct: The flavor name vSRX-Flavor indicates that the instance was created using a custom flavor, which defines the CPU, RAM, and disk space properties.

D. The myvSRX instance is currently running.

Correct: The ACTIVE status confirms that the instance is currently running.

Why These Statements?

Custom Flavor: The vSRX-Flavor name clearly indicates that a custom flavor was used to define the instance's resource allocation.

Running Instance: The ACTIVE status confirms that the instance is operational and available for use.
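The reading of the exhibit can be expressed as simple filters over the output rows. This sketch hard-codes one row using only the values quoted above (the exhibit's ID column is omitted). In practice, rows could come from `openstack server list -f json`, but the exact JSON shape of fields such as Networks can differ between client versions, so treat that source as an assumption. Both helper functions are hypothetical.

```python
from typing import Dict, List

# One row built from the exhibit values quoted above (ID column omitted).
ROWS: List[Dict[str, str]] = [
    {
        "Name": "myvSRX",
        "Status": "ACTIVE",
        "Networks": "VN-A-10.1.0.3",
        "Image": "vSRX3",
        "Flavor": "vSRX-Flavor",
    }
]


def active_servers(rows: List[Dict[str, str]]) -> List[str]:
    """Names of instances whose Status is ACTIVE, i.e. currently running."""
    return [row["Name"] for row in rows if row["Status"] == "ACTIVE"]


def flavor_of(rows: List[Dict[str, str]], name: str) -> str:
    """Flavor the named instance was launched with."""
    return next(row["Flavor"] for row in rows if row["Name"] == name)


if __name__ == "__main__":
    print(active_servers(ROWS), flavor_of(ROWS, "myvSRX"))
```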

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes understanding OpenStack commands and outputs, including the openstack server list command. Recognizing how images, flavors, and statuses are represented is essential for managing VM instances effectively.

For example, Juniper Contrail integrates with OpenStack Nova to provide advanced networking features for VMs, ensuring seamless operation based on their configurations.

You must provide tunneling in the overlay that supports multipath capabilities.

Which two protocols provide this function? (Choose two.)

MPLSoGRE

VXLAN

VPN

MPLSoUDP

In cloud networking, overlay networks are used to create virtualized networks that abstract the underlying physical infrastructure. To support multipath capabilities, a tunneling protocol must let the underlay spread different flows across equal-cost paths. Let’s analyze each option:

A. MPLSoGRE

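Incorrect: MPLS over GRE (MPLSoGRE) is a tunneling protocol that encapsulates MPLS packets within GRE tunnels. While it carries MPLS traffic, it does not inherently provide multipath capabilities: the GRE header has no port fields, so underlay routers that hash on the outer 5-tuple see all traffic between the same two tunnel endpoints as a single flow and forward it over a single path.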

B. VXLAN

Correct: VXLAN (Virtual Extensible LAN) is an overlay protocol that encapsulates Layer 2 Ethernet frames within UDP packets. It supports multipath by leveraging Equal-Cost Multi-Path (ECMP) routing in the underlay: the encapsulating endpoint typically derives the outer UDP source port from a hash of the inner frame's headers, so different flows hash onto different paths. VXLAN is widely used in cloud environments for extending Layer 2 networks across data centers.

C. VPN

Incorrect: Virtual Private Networks (VPNs) are used to securely connect remote networks or users over public networks. They do not inherently provide multipath capabilities or overlay tunneling for virtual networks.

D. MPLSoUDP

Correct: MPLS over UDP (MPLSoUDP) is a tunneling protocol that encapsulates MPLS packets within UDP packets. Like VXLAN, it varies the outer UDP source port per flow, so ECMP in the underlay network can spread traffic across multiple paths. MPLSoUDP is often used in service provider environments for scalable and flexible network architectures.

Why These Protocols?

VXLAN: Provides Layer 2 extension and supports multipath forwarding, making it ideal for large-scale cloud deployments.

MPLSoUDP: Combines the benefits of MPLS with UDP encapsulation, enabling efficient multipath routing in overlay networks.
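The difference between GRE and UDP encapsulation comes down to entropy in the outer header. The toy model below assumes underlay routers hash only the outer 5-tuple (some hardware can inspect deeper) and uses CRC32 as a stand-in for a router's hash function; the endpoint addresses, flow list, and four-link topology are hypothetical. The port numbers are the IANA-assigned ones for VXLAN (4789) and MPLS-in-UDP (6635), and the 49152 to 65535 source-port range follows the dynamic-port recommendation for VXLAN.

```python
import zlib
from typing import List, Set, Tuple

# (src_ip, dst_ip, ip_protocol, src_port, dst_port)
Flow = Tuple[str, str, int, int, int]

VXLAN_PORT = 4789     # IANA-assigned VXLAN UDP port (RFC 7348)
MPLS_UDP_PORT = 6635  # IANA-assigned MPLS-in-UDP port (RFC 7510)
N_PATHS = 4           # hypothetical number of equal-cost underlay links


def ecmp_path(outer: Flow, n_paths: int = N_PATHS) -> int:
    """Toy underlay ECMP: hash the outer 5-tuple onto one of n_paths links."""
    return zlib.crc32(repr(outer).encode()) % n_paths


def gre_outer(inner: Flow, src_ep: str, dst_ep: str) -> Flow:
    # GRE (IP protocol 47) has no ports, so every inner flow between the
    # same two tunnel endpoints produces an identical outer 5-tuple.
    return (src_ep, dst_ep, 47, 0, 0)


def udp_outer(inner: Flow, src_ep: str, dst_ep: str, dst_port: int) -> Flow:
    # UDP overlays (VXLAN, MPLSoUDP) derive the outer source port from a
    # hash of the inner flow, giving the underlay per-flow entropy.
    entropy = 49152 + zlib.crc32(repr(inner).encode()) % 16384
    return (src_ep, dst_ep, 17, entropy, dst_port)


# 100 hypothetical inner TCP flows between two workloads.
inner_flows: List[Flow] = [("10.1.0.3", "10.2.0.7", 6, 40000 + i, 443) for i in range(100)]

gre_paths: Set[int] = {ecmp_path(gre_outer(f, "192.0.2.1", "192.0.2.2")) for f in inner_flows}
udp_paths: Set[int] = {
    ecmp_path(udp_outer(f, "192.0.2.1", "192.0.2.2", VXLAN_PORT)) for f in inner_flows
}

if __name__ == "__main__":
    print("GRE paths used:", len(gre_paths))  # always 1
    print("UDP paths used:", len(udp_paths))  # typically several
```

Passing MPLS_UDP_PORT instead of VXLAN_PORT gives the same spreading behavior, since only the source port carries the per-flow entropy.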

JNCIA Cloud References:

The JNCIA-Cloud certification covers overlay networking protocols like VXLAN and MPLSoUDP as part of its curriculum on cloud architectures. Understanding these protocols is essential for designing scalable and resilient virtual networks.

For example, Juniper Contrail uses VXLAN to extend virtual networks across distributed environments, ensuring seamless communication and high availability.

Your e-commerce application is deployed on a public cloud. As compared to the rest of the year, it receives substantial traffic during the Christmas season.

In this scenario, which cloud computing feature automatically increases or decreases the resource based on the demand?

resource pooling

on-demand self-service

rapid elasticity

broad network access

Cloud computing provides several key characteristics that enable flexible and scalable resource management. Let’s analyze each option:

A. resource pooling

Incorrect: Resource pooling refers to the sharing of computing resources (e.g., storage, processing power) among multiple users or tenants. While important, it does not directly address the automatic scaling of resources based on demand.

B. on-demand self-service

Incorrect: On-demand self-service allows users to provision resources (e.g., virtual machines, storage) without requiring human intervention. While this is a fundamental feature of cloud computing, it does not describe the ability to automatically scale resources.

C. rapid elasticity

Correct: Rapid elasticity is the ability of a cloud environment to dynamically increase or decrease resources based on demand. This ensures that applications can scale up during peak traffic periods (e.g., Christmas season) and scale down during low-demand periods, optimizing cost and performance.

D. broad network access

Incorrect: Broad network access refers to the ability to access cloud services over the internet from various devices. While essential for accessibility, it does not describe the scaling of resources.

Why Rapid Elasticity?

Dynamic Scaling: Rapid elasticity ensures that resources are provisioned or de-provisioned automatically to meet changing workload demands.

Cost Efficiency: By scaling resources only when needed, organizations can optimize costs while maintaining performance.
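Rapid elasticity is usually implemented by an autoscaler that compares a load metric with a target. As one concrete policy, the sketch below uses the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, ceil(current replicas × current metric / target metric), clamped to a minimum and maximum. The default bounds are hypothetical, and the real HPA also applies a tolerance band and stabilization windows that are omitted here.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Scale a replica count toward a target metric value (e.g., CPU %).

    Proportional rule: ceil(current * current_metric / target_metric),
    clamped to [min_replicas, max_replicas].
    """
    raw = math.ceil(current * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # Holiday peak: 2 replicas at 90% CPU against a 45% target scale out to 4.
    print(desired_replicas(2, 90, 45))
    # Quiet period: 4 replicas at 10% CPU against a 50% target scale in to 1.
    print(desired_replicas(4, 10, 50))
```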

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes the key characteristics of cloud computing, including rapid elasticity. Understanding this concept is essential for designing scalable and cost-effective cloud architectures.

For example, Juniper Contrail supports cloud elasticity by enabling dynamic provisioning of network resources in response to changing demands.

You have built a Kubernetes environment offering virtual machine hosting using KubeVirt.

Which type of service have you created in this scenario?

Software as a Service (SaaS)

Platform as a Service (PaaS)

Infrastructure as a Service (IaaS)

Bare Metal as a Service (BMaaS)

Kubernetes combined with KubeVirt enables the hosting of virtual machines (VMs) alongside containerized workloads. This setup aligns with a specific cloud service model. Let’s analyze each option:

A. Software as a Service (SaaS)

Incorrect: SaaS delivers fully functional applications over the internet, such as Salesforce or Google Workspace. Hosting VMs using Kubernetes and KubeVirt does not fall under this category.

B. Platform as a Service (PaaS)

Incorrect: PaaS provides a platform for developers to build, deploy, and manage applications without worrying about the underlying infrastructure. While Kubernetes itself can be considered a PaaS component, hosting VMs goes beyond this model.

C. Infrastructure as a Service (IaaS)

Correct: IaaS provides virtualized computing resources such as servers, storage, and networking over the internet. By hosting VMs using Kubernetes and KubeVirt, you are offering infrastructure-level services, which aligns with the IaaS model.

D. Bare Metal as a Service (BMaaS)

Incorrect: BMaaS provides direct access to physical servers without virtualization. Kubernetes and KubeVirt focus on virtualized environments, making this option incorrect.

Why IaaS?

Virtualized Resources: Hosting VMs using Kubernetes and KubeVirt provides virtualized infrastructure, which is the hallmark of IaaS.

Scalability and Flexibility: Users can provision and manage VMs on-demand, similar to traditional IaaS offerings like AWS EC2 or OpenStack.
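The IaaS classification follows from where the provider's responsibility stops. The sketch below encodes the common layered responsibility model as data; the layer names and cut-off points are a textbook simplification chosen for illustration (hypothetical values, not a formal standard), and real offerings blur these lines.

```python
from typing import Dict, List

# Conventional layered view of a cloud stack, from hardware up.
LAYERS: List[str] = [
    "networking", "storage", "servers", "virtualization",
    "operating system", "middleware", "runtime", "data", "applications",
]

# How many bottom layers the provider manages under each model
# (a simplification; real services vary).
PROVIDER_MANAGED: Dict[str, int] = {
    "BMaaS": 3,  # physical servers, storage, networking
    "IaaS": 4,   # ...plus virtualization (e.g., VMs hosted via KubeVirt)
    "PaaS": 7,   # ...plus operating system, middleware, runtime
    "SaaS": 9,   # the whole stack
}


def consumer_manages(model: str) -> List[str]:
    """Layers left to the customer under the given service model."""
    return LAYERS[PROVIDER_MANAGED[model]:]


if __name__ == "__main__":
    # A KubeVirt tenant receives a VM and manages its guest OS and everything above it.
    print(consumer_manages("IaaS"))
```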

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes understanding cloud service models, including IaaS. Recognizing how Kubernetes and KubeVirt fit into the IaaS paradigm is essential for designing hybrid cloud solutions.

For example, Juniper Contrail integrates with Kubernetes and KubeVirt to provide advanced networking and security features for IaaS-like environments.

Which feature of Linux enables kernel-level isolation of global resources?

ring protection

stack protector

namespaces

shared libraries

Linux provides several mechanisms for isolating resources and ensuring security. Let’s analyze each option:

A. ring protection

Incorrect: Ring protection refers to CPU privilege levels (e.g., Rings 0–3) that control access to system resources. While important for security, it does not provide kernel-level isolation of global resources.

B. stack protector

Incorrect: Stack protector is a compiler feature that helps prevent buffer overflow attacks by adding guard variables to function stacks. It is unrelated to resource isolation.

C. namespaces

Correct: Namespaces are a Linux kernel feature that provides kernel-level isolation of global resources such as process IDs, network interfaces, mount points, and user IDs. Each namespace has its own isolated view of these resources, enabling features like containerization.

D. shared libraries

Incorrect: Shared libraries allow multiple processes to use the same code, reducing memory usage. They do not provide isolation or security.

Why Namespaces?

Resource Isolation: Namespaces isolate processes, networks, and other resources, ensuring that changes in one namespace do not affect others.

Containerization Foundation: Namespaces are a core technology behind containerization platforms like Docker and Kubernetes, enabling lightweight and secure environments.
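Namespace membership is visible from user space: each entry under /proc/<pid>/ns is a symbolic link whose target names the namespace type and an identifier (for example `net:[4026531840]`), and processes in the same namespace show the same identifier. The helper below is a hypothetical sketch that reads those links for the current process; it returns an empty dictionary on systems without /proc.

```python
import os
from typing import Dict


def list_namespaces(pid: str = "self") -> Dict[str, str]:
    """Map namespace type -> link target for a process (Linux only).

    Processes that share a namespace show the same identifier in the
    link target, e.g. 'net:[4026531840]'.
    """
    ns_dir = f"/proc/{pid}/ns"
    if not os.path.isdir(ns_dir):
        return {}  # not Linux, or /proc is unavailable
    return {
        name: os.readlink(os.path.join(ns_dir, name))
        for name in sorted(os.listdir(ns_dir))
    }


if __name__ == "__main__":
    for ns_type, ident in list_namespaces().items():
        print(f"{ns_type:20} {ident}")
```

Running it on the host and again inside a container typically shows different `net`, `pid`, and `mnt` identifiers, which is the isolation described above.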

JNCIA Cloud References:

The JNCIA-Cloud certification covers Linux fundamentals, including namespaces, as part of its containerization curriculum. Understanding namespaces is essential for managing containerized workloads in cloud environments.

For example, Juniper Contrail leverages namespaces to isolate network resources in containerized environments, ensuring secure and efficient operation.

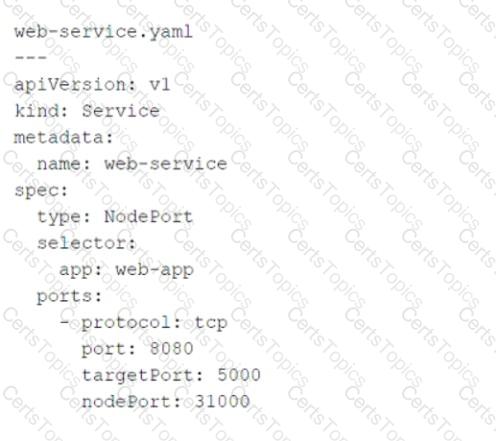

Click the Exhibit button.

Referring to the exhibit, which port number would external users use to access the WEB application?

80

8080

31000

5000

The YAML file provided in the exhibit defines a Kubernetes Service object of type NodePort. Let’s break down the key components of the configuration and analyze how external users access the WEB application:

Key Fields in the YAML File:

type: NodePort:

This specifies that the service is exposed on a static port on each node in the cluster. External users can access the service using the node's IP address and the assigned nodePort.

port: 8080:

This is the port on which the service is exposed internally within the Kubernetes cluster. Other services or pods within the cluster can communicate with this service using port 8080.

targetPort: 5000:

This is the port on which the actual application (WEB application) is running inside the pod. The service forwards traffic from port: 8080 to targetPort: 5000.

nodePort: 31000:

This is the port on the node (host machine) where the service is exposed externally. External users will use this port to access the WEB application.

How External Users Access the WEB Application:

External users access the WEB application using the node's IP address and the nodePort value (31000).

The Kubernetes service listens on this port and forwards incoming traffic to the appropriate pods running the WEB application.
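The traffic path above can be modeled in a few lines. This is a toy model, not kube-proxy: the `NodePortService` class and `backend_port` helper are hypothetical, and the range check assumes the cluster uses the default node port range (configurable through the kube-apiserver `--service-node-port-range` flag).

```python
from dataclasses import dataclass

NODEPORT_RANGE = range(30000, 32768)  # default node port range: 30000-32767


@dataclass(frozen=True)
class NodePortService:
    port: int         # cluster-internal Service port (8080 in the exhibit)
    target_port: int  # port the application listens on in the pod (5000)
    node_port: int    # port opened on every node for external clients (31000)

    def __post_init__(self) -> None:
        if self.node_port not in NODEPORT_RANGE:
            raise ValueError(f"nodePort {self.node_port} is outside 30000-32767")


def backend_port(svc: NodePortService, arriving_port: int, external: bool) -> int:
    """Port a request reaches inside the pod, given where it entered."""
    expected = svc.node_port if external else svc.port
    if arriving_port != expected:
        raise ValueError(f"port {arriving_port} does not reach this Service")
    return svc.target_port


if __name__ == "__main__":
    web = NodePortService(port=8080, target_port=5000, node_port=31000)
    print(backend_port(web, 31000, external=True))   # external: <node-ip>:31000
    print(backend_port(web, 8080, external=False))   # in-cluster: <service-ip>:8080
```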

Why Not Other Options?

A. 80: Port 80 is commonly used for HTTP traffic, but it is not specified in the YAML file. The service does not expose port 80 externally.

B. 8080: Port 8080 is the internal port used within the Kubernetes cluster. It is not the port exposed to external users.

D. 5000: Port 5000 is the target port where the application runs inside the pod. It is not directly accessible to external users.

Why 31000?

NodePort Service Type: The NodePort service type exposes the application on a high-numbered port (default range: 30000–32767) on each node in the cluster.

External Accessibility: External users must use the nodePort value (31000) along with the node's IP address to access the WEB application.

JNCIA Cloud References:

The JNCIA-Cloud certification covers Kubernetes networking concepts, including service types like ClusterIP, NodePort, and LoadBalancer. Understanding how NodePort services work is essential for exposing applications to external users in Kubernetes environments.

For example, Juniper Contrail integrates with Kubernetes to provide advanced networking features, such as load balancing and network segmentation, for services like the one described in the exhibit.

You are asked to deploy a cloud solution for a customer that requires strict control over their resources and data. The deployment must allow the customer to implement and manage precise security controls to protect their data.

Which cloud deployment model should be used in this situation?

private cloud

hybrid cloud

dynamic cloud

public cloud

Cloud deployment models define how cloud resources are provisioned and managed. The four options in this question compare as follows:

Public Cloud: Resources are shared among multiple organizations and managed by a third-party provider. Examples include AWS, Microsoft Azure, and Google Cloud Platform.

Private Cloud: Resources are dedicated to a single organization and can be hosted on-premises or by a third-party provider. Private clouds offer greater control over security, compliance, and resource allocation.

Hybrid Cloud: Combines public and private clouds, allowing data and applications to move between them. This model provides flexibility and optimization of resources.

Dynamic Cloud: Not a standard cloud deployment model. It may refer to the dynamic scaling capabilities of cloud environments but is not a recognized category.

In this scenario, the customer requires strict control over their resources and data, as well as the ability to implement and manage precise security controls. A private cloud is the most suitable deployment model because:

Dedicated Resources: The infrastructure is exclusively used by the organization, ensuring isolation and control.

Customizable Security: The organization can implement its own security policies, encryption mechanisms, and compliance standards.

On-Premises Option: If hosted internally, the organization retains full physical control over the data center and hardware.

Why Not Other Options?

Public Cloud: Shared infrastructure means less control over security and compliance. While public clouds offer robust security features, they may not meet the strict requirements of the customer.

Hybrid Cloud: While hybrid clouds combine the benefits of public and private clouds, they introduce complexity and may not provide the level of control the customer desires.

Dynamic Cloud: Not a valid deployment model.

JNCIA Cloud References:

The JNCIA-Cloud certification covers cloud deployment models and their use cases. Private clouds are highlighted as ideal for organizations with stringent security and compliance requirements, such as financial institutions, healthcare providers, and government agencies.

For example, Juniper Contrail supports private cloud deployments by providing advanced networking and security features, enabling organizations to build and manage secure, isolated cloud environments.

Which two CPU flags indicate virtualization? (Choose two.)

lvm

vmx

xvm

kvm

CPU flags indicate hardware support for specific features, including virtualization. Let’s analyze each option:

A. lvm

Incorrect: LVM (Logical Volume Manager) is a storage management technology used in Linux systems. It is unrelated to CPU virtualization.

B. vmx

Correct: The vmx flag indicates Intel Virtualization Technology (VT-x), which provides hardware-assisted virtualization capabilities. This feature is essential for running hypervisors like VMware ESXi, KVM, and Hyper-V.

C. xvm

Incorrect: xvm is not a CPU flag that indicates virtualization. The AMD counterpart of Intel's vmx flag is svm, not xvm.

D. kvm

Correct: kvm refers to Kernel-based Virtual Machine (KVM), the Linux kernel module that leverages hardware virtualization extensions (e.g., Intel VT-x or AMD-V) to run virtual machines. Strictly speaking, kvm is not a flag listed in /proc/cpuinfo; it relies on the hardware flags vmx (Intel) or svm (AMD). Of the remaining options, however, it is the only one directly tied to CPU virtualization.

Why These Answers?

Hardware Virtualization Support: vmx (Intel VT-x) is the CPU flag itself, and kvm is the Linux virtualization module that requires it (or svm on AMD processors). Together they enable efficient execution of virtual machines by offloading tasks to the CPU.
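On a Linux host, the hardware flags appear in the `flags` lines of /proc/cpuinfo, while a loaded kvm module typically exposes the /dev/kvm device node. The helper names below are hypothetical; the parser takes the file's text as input so it can be checked without real hardware.

```python
import os
from typing import Set

# Hardware virtualization flags as reported in /proc/cpuinfo:
# vmx = Intel VT-x, svm = AMD-V.
VIRT_FLAGS = frozenset({"vmx", "svm"})


def cpu_virt_flags(cpuinfo_text: str) -> Set[str]:
    """Return the hardware virtualization flags found in /proc/cpuinfo text."""
    found: Set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            found |= VIRT_FLAGS.intersection(value.split())
    return found


def kvm_device_present() -> bool:
    """True if /dev/kvm exists, which usually means the kvm module is loaded."""
    return os.path.exists("/dev/kvm")


if __name__ == "__main__" and os.path.exists("/proc/cpuinfo"):
    with open("/proc/cpuinfo") as f:
        print("CPU virtualization flags:", cpu_virt_flags(f.read()) or "none")
    print("/dev/kvm present:", kvm_device_present())
```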

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes understanding virtualization technologies, including hardware-assisted virtualization. Recognizing CPU flags like vmx (Intel) and svm (AMD), and knowing that KVM depends on them, is crucial for deploying and troubleshooting virtualized environments.

For example, Juniper Contrail integrates with hypervisors like KVM to manage virtualized workloads in cloud environments. Ensuring hardware virtualization support is a prerequisite for deploying such solutions.

Which command should you use to obtain low-level information about Docker objects?

docker info

docker inspect

docker container

docker system

Docker provides various commands to manage and interact with Docker objects such as containers, images, networks, and volumes. To obtain low-level information about these objects, the docker inspect command is used. Let’s analyze each option:

A. docker info <OBJECT_NAME>

Incorrect: The docker info command provides high-level information about the Docker daemon itself, such as the number of containers, images, and system-wide configurations. It does not provide detailed information about specific Docker objects.

B. docker inspect <OBJECT_NAME>

Correct: The docker inspect command retrieves low-level metadata and configuration details about Docker objects (e.g., containers, images, networks, volumes). This includes information such as IP addresses, mount points, environment variables, and network settings. It outputs the data in JSON format for easy parsing and analysis.

C. docker container <OBJECT_NAME>

Incorrect: The docker container command is a parent command for managing containers (e.g., docker container ls, docker container start). It does not directly provide low-level information about a specific container.

D. docker system <OBJECT_NAME>

Incorrect: The docker system command is used for system-wide operations, such as pruning unused resources (docker system prune) or viewing disk usage (docker system df). It does not provide low-level details about specific Docker objects.

Why docker inspect?

Detailed Metadata: docker inspect is specifically designed to retrieve comprehensive, low-level information about Docker objects.

Versatility: It works with multiple object types, including containers, images, networks, and volumes.
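Because docker inspect prints a JSON array with one object per inspected object, its output is easy to post-process. The following is a minimal Python sketch, assuming container output in the shape Docker currently produces (Name, State, NetworkSettings.Networks, Mounts, Config.Env); the function name and the sample values in the test are hypothetical.

```python
import json


def summarize_containers(inspect_json: str) -> dict[str, dict]:
    """Map container name to a few low-level fields from `docker inspect` output."""
    summary = {}
    for obj in json.loads(inspect_json):  # top level is a JSON array
        name = obj.get("Name", "").lstrip("/")  # container names are prefixed with "/"
        networks = obj.get("NetworkSettings", {}).get("Networks", {})
        summary[name] = {
            "status": obj.get("State", {}).get("Status"),
            # One IP address per attached network, e.g. {"bridge": "172.17.0.2"}
            "ips": {net: cfg.get("IPAddress") for net, cfg in networks.items()},
            "mounts": [m.get("Destination") for m in obj.get("Mounts", [])],
            "env": obj.get("Config", {}).get("Env", []),
        }
    return summary
```

In practice you would pass it the stdout of subprocess.run(["docker", "inspect", "web"], capture_output=True, text=True), where "web" is a hypothetical container name. For a single field, docker inspect --format '{{.State.Status}}' web uses a Go template instead of post-processing.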

JNCIA Cloud References:

The JNCIA-Cloud certification covers Docker as part of its containerization curriculum. Understanding how to use Docker commands like docker inspect is essential for managing and troubleshooting containerized applications in cloud environments.

For example, Juniper Contrail integrates with container orchestration platforms like Kubernetes, which run containers through a container runtime such as Docker or containerd. Proficiency with Docker commands ensures effective operation and debugging of containerized workloads.

Which component of Kubernetes runs on all nodes and ensures that the containers are running in a pod?

kubelet

kube-proxy

container runtime

kube controller

Kubernetes components work together to ensure the proper functioning of the cluster and its workloads. Let’s analyze each option:

A. kubelet

Correct:

The kubelet is a critical Kubernetes component that runs on every node in the cluster. It is responsible for ensuring that containers are running in pods as expected. The kubelet communicates with the container runtime to start, stop, and monitor containers based on the pod specifications provided by the control plane.

B. kube-proxy

Incorrect:

The kube-proxy is a network proxy that runs on each node and manages network communication for services and pods. It ensures proper load balancing and routing of traffic but does not directly manage the state of containers or pods.

C. container runtime

Incorrect:

The container runtime (e.g., containerd, CRI-O) is responsible for running containers on the node. While it executes the containers, it does not ensure that the containers are running as part of a pod. This responsibility lies with the kubelet.

D. kube controller

Incorrect:

The kube controller (kube-controller-manager) is part of the control plane and ensures that the desired state of the cluster (e.g., number of replicas) is maintained. It runs on control-plane nodes rather than on every node, and it does not directly manage the state of containers in pods.

Why kubelet?

Pod Lifecycle Management: The kubelet ensures that the containers specified in a pod's definition are running and healthy. If a container exits, the kubelet restarts it according to the pod's restartPolicy.

Node-Level Agent: The kubelet acts as the primary node agent, interfacing with the container runtime and the Kubernetes API server to manage pod lifecycle operations.
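Whether the kubelet restarts an exited container depends on the pod's spec.restartPolicy, which is Always, OnFailure, or Never. The following is a toy Python model of that decision, not kubelet source code; the class and function names are hypothetical, and the real kubelet also applies an exponential back-off between restarts (visible as CrashLoopBackOff) and acts on liveness probe failures.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ContainerStatus:
    name: str
    running: bool
    exit_code: Optional[int] = None  # set once the container has terminated


def containers_to_restart(restart_policy: str, statuses: list[ContainerStatus]) -> list[str]:
    """Return names of terminated containers that would be restarted under restart_policy."""
    if restart_policy not in {"Always", "OnFailure", "Never"}:
        raise ValueError(f"unknown restartPolicy: {restart_policy}")
    to_restart = []
    for status in statuses:
        if status.running:
            continue  # observed state already matches the desired state
        if restart_policy == "Always":
            to_restart.append(status.name)
        elif restart_policy == "OnFailure" and status.exit_code != 0:
            to_restart.append(status.name)  # only non-zero exits count as failures
        # "Never": terminated containers are left as they are
    return to_restart
```

The model shows the reconciliation idea: compare the observed container state with the pod's desired state and act only on the difference.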

JNCIA Cloud References:

The JNCIA-Cloud certification covers Kubernetes architecture, including the role of the kubelet. Understanding how the kubelet works is essential for managing the health and operation of pods in Kubernetes clusters.

For example, Juniper Contrail integrates with Kubernetes to provide advanced networking features, relying on the kubelet to manage pod lifecycle events effectively.

Which OpenStack service provides API client authentication?

Keystone

Nova

Heat

Neutron

OpenStack is an open-source cloud computing platform that provides various services for managing infrastructure resources. Let’s analyze each option:

A. Keystone

Correct: Keystone is the OpenStack service responsible for identity management and API client authentication. It provides authentication, authorization, and service discovery for other OpenStack services.

B. Nova

Incorrect: Nova is the OpenStack compute service that manages virtual machines and bare-metal servers. It does not handle authentication or API client validation.

C. Heat

Incorrect: Heat is the OpenStack orchestration service that automates the deployment and management of infrastructure resources using templates. It does not provide authentication services.

D. Neutron

Incorrect: Neutron is the OpenStack networking service that manages virtual networks, routers, and IP addresses. It is unrelated to API client authentication.

Why Keystone?

Authentication and Authorization: Keystone ensures that only authorized users and services can access OpenStack resources by validating credentials and issuing tokens.

Service Discovery: Keystone also provides a catalog of available OpenStack services and their endpoints, enabling seamless integration between components.
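With the Identity API v3, a client requests a token by POSTing credentials to /v3/auth/tokens; the token comes back in the X-Subject-Token response header, and the response body carries the service catalog. The Python sketch below builds such a request with the standard library and searches a catalog for an endpoint. The Keystone URL, user, project, and endpoint values are hypothetical placeholders, the function names are illustrative, and the request is only constructed here, not sent.

```python
import json
import urllib.request
from typing import Optional


def build_token_request(keystone_url: str, user: str, password: str, project: str,
                        domain: str = "Default") -> urllib.request.Request:
    """Build a POST /v3/auth/tokens request using password auth scoped to a project."""
    body = {
        "auth": {
            "identity": {
                "methods": ["password"],
                "password": {
                    "user": {"name": user, "domain": {"name": domain}, "password": password}
                },
            },
            "scope": {"project": {"name": project, "domain": {"name": domain}}},
        }
    }
    return urllib.request.Request(
        keystone_url.rstrip("/") + "/v3/auth/tokens",
        data=json.dumps(body).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def find_endpoint(token_body: dict, service_type: str, interface: str = "public") -> Optional[str]:
    """Search the service catalog returned with a token for one endpoint URL."""
    for service in token_body.get("token", {}).get("catalog", []):
        if service.get("type") == service_type:
            for endpoint in service.get("endpoints", []):
                if endpoint.get("interface") == interface:
                    return endpoint.get("url")
    return None
```

Sending the request with urllib.request.urlopen(req) would return the token in resp.headers["X-Subject-Token"], and json.load(resp) would give the body to pass to find_endpoint. Other OpenStack services then accept the token in an X-Auth-Token request header.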

JNCIA Cloud References:

The JNCIA-Cloud certification covers OpenStack services, including Keystone, as part of its cloud infrastructure curriculum. Understanding Keystone’s role in authentication is essential for managing secure OpenStack deployments.

For example, Juniper Contrail integrates with OpenStack Keystone to authenticate and authorize requests for network resources, ensuring secure and efficient operation.

Which Linux protection ring is the least privileged?

0

1

2

3

Linux uses the CPU's protection rings to define levels of privilege for executing code and accessing system resources. Rings are part of the CPU architecture and provide a mechanism for enforcing security boundaries between the operating system kernel and user applications. The x86 architecture defines four rings, numbered 0 to 3:

Ring 0 (Most Privileged): This is the highest level of privilege, reserved for the kernel and critical system functions. The operating system kernel operates in this ring because it needs unrestricted access to hardware resources and control over the entire system.

Ring 1 and Ring 2: These intermediate rings are rarely used in modern operating systems. They can be used for device drivers or other specialized purposes, but Linux, like most mainstream operating systems, uses only Ring 0 and Ring 3.

Ring 3 (Least Privileged): This is the least privileged ring, where user-level applications run. Applications running in Ring 3 have limited access to system resources and must request services from the kernel (which runs in Ring 0) via system calls. This ensures that untrusted or malicious code cannot directly interfere with core system operations.
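Rings are enforced by the processor itself: on x86, the ring the CPU is currently executing in (the current privilege level, or CPL) is held in the low two bits of the CS segment selector. The Python sketch below decodes a selector value to show this. The values 0x10 and 0x33 are the kernel and user code selectors used by 64-bit Linux; for an ordinary process, a debugger register dump (e.g., gdb's info registers) typically shows cs as 0x33. The class and function names are hypothetical.

```python
from typing import NamedTuple


class Selector(NamedTuple):
    index: int  # descriptor table entry number
    table: str  # "GDT" or "LDT"
    rpl: int    # requested privilege level; for CS this is the current ring (CPL)


def decode_selector(value: int) -> Selector:
    """Split a 16-bit selector: bits 15..3 index, bit 2 table indicator, bits 1..0 RPL."""
    value &= 0xFFFF
    return Selector(index=value >> 3, table="LDT" if value & 0b100 else "GDT", rpl=value & 0b11)


# Code segment selectors used by 64-bit Linux: kernel CS and user CS
KERNEL_CS, USER_CS = 0x10, 0x33

for label, cs in (("kernel", KERNEL_CS), ("user", USER_CS)):
    sel = decode_selector(cs)
    print(f"{label}: CS={cs:#06x} -> GDT entry {sel.index}, ring {sel.rpl}")
```

Kernel code therefore executes with CPL 0 and user code with CPL 3, matching the Ring 0 and Ring 3 roles described above.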

Why Ring 3 is the Least Privileged:

Isolation: User applications are isolated from the core system functions to prevent accidental or intentional damage to the system.

Security: By restricting access to hardware and sensitive system resources, the risk of vulnerabilities or exploits is minimized.

Stability: Running applications in Ring 3 ensures that even if an application crashes or behaves unexpectedly, it does not destabilize the entire system.

JNCIA Cloud References:

The Juniper Networks Certified Associate - Cloud (JNCIA-Cloud) curriculum emphasizes understanding virtualization, cloud architectures, and the underlying technologies that support them. While the JNCIA-Cloud certification focuses more on Juniper-specific technologies like Contrail, it also covers foundational concepts such as virtualization, Linux, and cloud infrastructure.

In the context of virtualization and cloud environments, understanding the role of protection rings is important because:

Hypervisors run with the highest privilege to manage virtual machines (VMs); on Linux, KVM runs as part of the host kernel in Ring 0.

Guest code is kept less privileged than the hypervisor. Before hardware-assisted virtualization, guest kernels were moved to a less privileged ring (e.g., Ring 1 or Ring 3); with Intel VT-x or AMD-V, the guest kernel runs in Ring 0 of a separate guest mode, and sensitive operations trap to the hypervisor.

For example, in a virtualized environment such as one managed by Juniper Contrail, the hypervisor (e.g., KVM) manages the execution of VMs. Applications inside each VM still run in Ring 3 of the guest, just as user applications do on the host. This separation ensures that the VMs are securely isolated from each other and from the host system.

Thus, the least privileged Linux protection ring is Ring 3, where user applications execute with restricted access to system resources.

Which OpenStack service displays server details of the compute node?

Keystone

Neutron

Cinder

Nova

OpenStack provides various services to manage cloud infrastructure resources, including compute nodes and virtual machines (VMs). Let’s analyze each option:

A. Keystone

Incorrect: Keystone is the OpenStack identity service responsible for authentication and authorization. It does not display server details of compute nodes.

B. Neutron

Incorrect: Neutron is the OpenStack networking service that manages virtual networks, routers, and IP addresses. It is unrelated to displaying server details of compute nodes.

C. Cinder

Incorrect: Cinder is the OpenStack block storage service that provides persistent storage volumes for VMs. It does not display server details of compute nodes.

D. Nova

Correct: Nova is the OpenStack compute service responsible for managing the lifecycle of virtual machines, including provisioning, scheduling, and monitoring. It also provides detailed information about compute nodes and VMs, such as CPU, memory, and disk usage.

Why Nova?

Compute Node Management: Nova manages compute nodes and provides APIs to retrieve server details, including resource utilization and VM status.

Integration with CLI/REST APIs: Commands like openstack server show (VM details), or openstack hypervisor show and the legacy nova hypervisor-show (compute node details), can be used to display this information.
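The resource figures Nova reports for a compute node can be turned into remaining capacity with simple subtraction. The following is a minimal Python sketch, assuming the per-hypervisor fields returned by older Compute API microversions (vcpus, memory_mb, local_gb and their *_used counterparts; microversion 2.88 removed them in favor of the Placement service) and the admin-only OS-EXT-SRV-ATTR:host attribute of a server record. The function names and sample values are hypothetical.

```python
from typing import Optional


def free_capacity(hypervisor: dict) -> dict[str, int]:
    """Return unused vCPUs, RAM (MB) and local disk (GB) for one compute node record."""
    return {
        "vcpus": hypervisor["vcpus"] - hypervisor["vcpus_used"],
        "memory_mb": hypervisor["memory_mb"] - hypervisor["memory_mb_used"],
        "local_gb": hypervisor["local_gb"] - hypervisor["local_gb_used"],
    }


def server_host(server: dict) -> Optional[str]:
    """Return the compute node a VM runs on, if the caller is allowed to see it."""
    # Admin-only extended attribute; absent for non-admin callers
    return server.get("OS-EXT-SRV-ATTR:host")
```

These functions take the inner "hypervisor" or "server" objects from the Compute API responses; note that the figures ignore any CPU or RAM overcommit ratios configured for the scheduler.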

JNCIA Cloud References:

The JNCIA-Cloud certification covers OpenStack services, including Nova, as part of its cloud infrastructure curriculum. Understanding Nova’s role in managing compute resources is essential for operating OpenStack environments.

For example, Juniper Contrail integrates with OpenStack Nova to provide advanced networking and security features for compute nodes and VMs.

Copyright © 2021-2025 CertsTopics. All Rights Reserved